When you're ready to run CockroachDB in production in a single region, it's important to deploy at least 3 CockroachDB nodes to take advantage of CockroachDB's automatic replication, distribution, rebalancing, and resiliency capabilities.

If you haven't already, review the full range of topology patterns to ensure you choose the right one for your use case.

Before you begin

- Multi-region topology patterns are almost always table-specific. If you haven't already, review the full range of patterns to ensure you choose the right one for each of your tables.

- Review how data is replicated and distributed across a cluster, and how this affects performance. It is especially important to understand the concept of the "leaseholder". For a summary, see Reads and Writes in CockroachDB. For a deeper dive, see the CockroachDB Architecture Overview.

- Review the concept of locality, which CockroachDB uses to place and balance data based on how you define replication controls.

- Review the recommendations and requirements in our Production Checklist.

- This topology doesn't account for hardware specifications, so be sure to follow our hardware recommendations and perform a proof of concept (POC) to size hardware for your use case. For optimal cluster performance, Cockroach Labs recommends that all nodes use the same hardware and operating system.

- Adopt relevant SQL Best Practices to ensure optimal performance.

Configuration

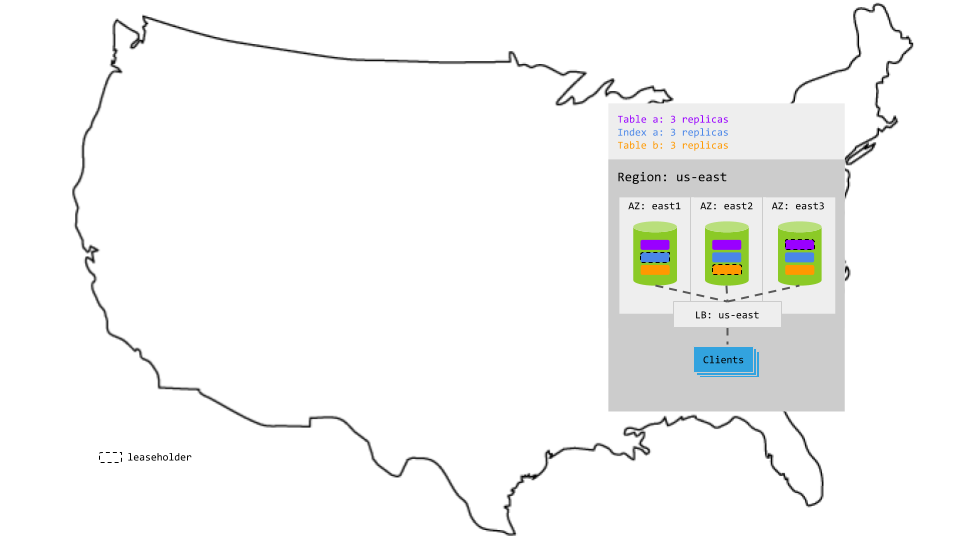

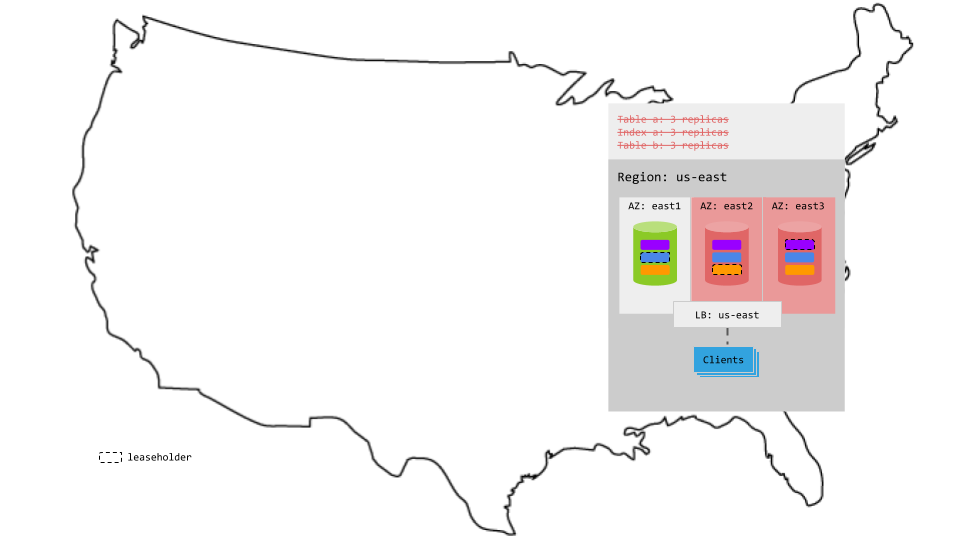

Provision hardware as follows:

- 1 region with 3 AZs

- 3+ VMs evenly distributed across AZs; add more VMs to increase throughput

- App and load balancer in same region as VMs for CockroachDB

- The load balancer redirects to CockroachDB nodes in the region

Start each node on a separate VM, setting the --locality flag to the node's region and AZ combination. For example, the following command starts a node in the east1 availability zone of the us-east region:

    $ cockroach start \
    --locality=region=us-east,zone=east1 \
    --certs-dir=certs \
    --advertise-addr=<node1 internal address> \
    --join=<node1 internal address>:26257,<node2 internal address>:26257,<node3 internal address>:26257 \
    --cache=.25 \
    --max-sql-memory=.25 \
    --background
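Only the zone and the advertised address change from node to node; the --join list is the same everywhere. The following dry-run sketch prints, rather than executes, the start command for one node per AZ. The zone names east2 and east3 and the node1.internal style addresses are placeholders invented for illustration, not values from this guide.

```shell
# Dry run: print (do not execute) the start command for one node per AZ.
# REGION, the zone names, and the node addresses are hypothetical placeholders.
REGION="us-east"
NODES="east1=node1.internal east2=node2.internal east3=node3.internal"

# Build the --join list: every node address on port 26257.
join_list() {
  join=""
  for pair in $NODES; do
    join="${join:+$join,}${pair#*=}:26257"
  done
  printf '%s\n' "$join"
}

# Print the start command for a node in zone $1 with address $2.
start_command() {
  printf 'cockroach start --locality=region=%s,zone=%s --certs-dir=certs --advertise-addr=%s --join=%s --cache=.25 --max-sql-memory=.25 --background\n' \
    "$REGION" "$1" "$2" "$(join_list)"
}

# One command per zone=address pair.
for pair in $NODES; do
  start_command "${pair%%=*}" "${pair#*=}"
done
```

Each printed line is meant to be run on the VM for that zone. A new multi-node cluster also needs a one-time cockroach init against any one node after the nodes have started.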

With the default 3-way replication factor and --locality set as described, CockroachDB balances each range of table data across AZs, one replica per AZ. System data is replicated 5 times by default and also balanced across AZs, thus increasing the resiliency of the cluster as a whole.
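To make the placement concrete, the sketch below counts replicas per AZ for both replication factors. It assumes replicas are spread as evenly as possible across the 3 AZs, which is a simplification standing in for CockroachDB's actual allocator; the function name is hypothetical.

```shell
# Replicas per AZ when $1 replicas of a range are spread as evenly as
# possible across $2 AZs (a simplifying assumption, not the real allocator).
replicas_per_az() {
  rf=$1; azs=$2; out=""
  i=0
  while [ "$i" -lt "$azs" ]; do
    n=$(( rf / azs ))
    # The first (rf mod azs) AZs each take one extra replica.
    [ "$i" -lt $(( rf % azs )) ] && n=$(( n + 1 ))
    out="${out:+$out }$n"
    i=$(( i + 1 ))
  done
  echo "$out"
}

echo "table data (rf=3): $(replicas_per_az 3 3)"     # 1 1 1
echo "system data (rf=5): $(replicas_per_az 5 3)"    # 2 2 1
```

Under this assumption, losing one AZ removes at most 2 of the 5 system replicas, so 3 remain, which is still a majority.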

Characteristics

Latency

Reads

Since all ranges, including leaseholder replicas, are in a single region, read latency is very low.

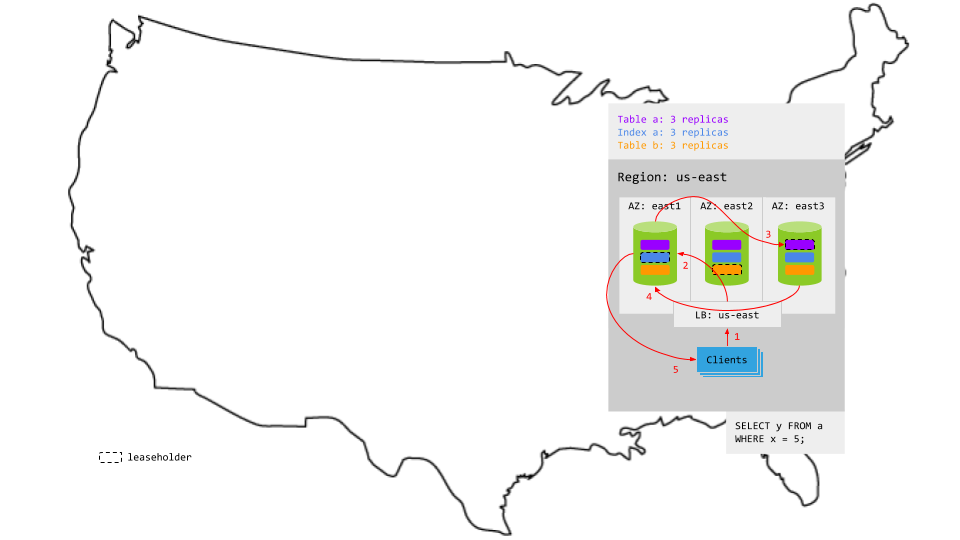

For example, in the animation below:

- The read request reaches the load balancer.

- The load balancer routes the request to a gateway node.

- The gateway node routes the request to the relevant leaseholder.

- The leaseholder retrieves the results and returns them to the gateway node.

- The gateway node returns the results to the client.

Writes

Since all ranges are in a single region, writes achieve consensus without leaving the region and, thus, write latency is very low as well.

For example, in the animation below:

- The write request reaches the load balancer.

- The load balancer routes the request to a gateway node.

- The gateway node routes the request to the leaseholder replicas for the relevant table and secondary index.

- While each leaseholder appends the write to its Raft log, it notifies its follower replicas.

- In each case, as soon as one follower has appended the write to its Raft log (and thus a majority of replicas agree based on identical Raft logs), it notifies the leaseholder and the write is committed on the agreeing replicas.

- The leaseholders then return acknowledgement of the commit to the gateway node.

- The gateway node returns the acknowledgement to the client.
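The majority rule in the steps above means a write waits only for the fastest followers, not all of them. The sketch below is a rough model that considers follower round-trip times only: with the leaseholder counting as one vote, the commit wait is set by the slowest of the fastest (quorum - 1) followers. The function name and the millisecond values are hypothetical.

```shell
# Commit wait for one range: the leaseholder counts as one vote, so it needs
# acknowledgements from the (quorum - 1) fastest followers.
# Usage: commit_wait_ms <follower round-trip time in ms>...
commit_wait_ms() {
  replicas=$(( $# + 1 ))        # followers plus the leaseholder
  needed=$(( replicas / 2 ))    # quorum - 1 follower acknowledgements
  # Sort the round-trip times and pick the needed-th fastest one.
  printf '%s\n' "$@" | sort -n | sed -n "${needed}p"
}

commit_wait_ms 2 5        # 3 replicas: waits for the faster follower, prints 2
commit_wait_ms 1 4 3 9    # 5 replicas: waits for the second-fastest, prints 3
```

Because every follower is in the same region, all of these round trips are short, which is why write latency stays low in this topology.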

Resiliency

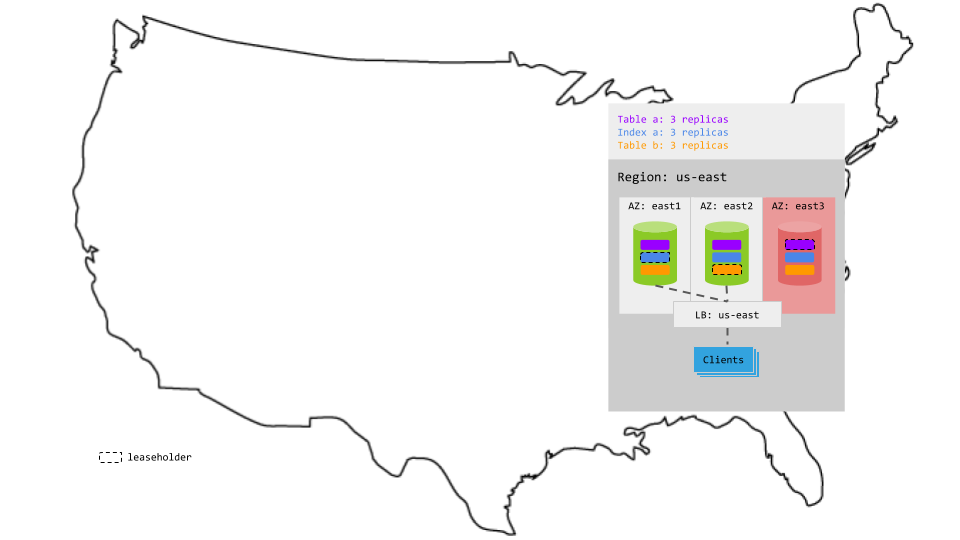

Because each range is balanced across AZs, one AZ can fail without interrupting access to any data.

However, if an additional AZ fails at the same time, the ranges that lose consensus become unavailable for reads and writes.
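The two failure cases follow from majority arithmetic. As a minimal sketch, assuming one replica per AZ as in the configuration described earlier, a range stays available while the surviving replicas still form a majority. The function name is hypothetical.

```shell
# A range stays available while a majority of its replicas survive.
# Assumes one replica per AZ, so each failed AZ removes exactly one replica.
# Usage: range_status <replication factor> <failed AZs>
range_status() {
  survivors=$(( $1 - $2 ))
  quorum=$(( $1 / 2 + 1 ))
  if [ "$survivors" -ge "$quorum" ]; then
    echo available
  else
    echo unavailable
  fi
}

for failed in 0 1 2; do
  echo "failed AZs: $failed -> $(range_status 3 "$failed")"
done
```

With 3 replicas the quorum is 2, so the loop reports available for 0 and 1 failed AZs and unavailable for 2.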

See also

- Multi-Region Capabilities Overview

- How to Choose a Multi-Region Configuration

- When to Use ZONE vs. REGION Survival Goals

- When to Use REGIONAL vs. GLOBAL Tables

- Low Latency Reads and Writes in a Multi-Region Cluster

- Migrate to Multi-Region SQL

- Topology Patterns Overview

- Single-region patterns

- Multi-region patterns