As we outlined in Deploy a Multi-Region Application in Just 3 Steps, we’ve made major changes to simplify the multi-region configuration in CockroachDB. The new abstractions allow users to think of multi-region databases and tables in three ways:

The regions in which the database should reside

The survivability goal of the database

The latency requirements for each table

Under the hood, however, the system still must be concerned with more fundamental aspects of multi-region applications and deployments such as where to place replicas, how many replicas to create for each table, and how to keep track of the regions within the database. In this blog post we outline some of the challenges our engineering team had to deal with in simplifying the multi-region experience for CockroachDB users.

How to deploy multi-region applications in CockroachDB

CockroachDB has supported multi-region deployments since its first release, but historically they’ve been difficult to configure. In the past, users had to specify the regions in which their nodes resided, and then remember where the nodes were placed (there was no SHOW command for inspecting node regions). With the nodes placed, users then had to manually craft zone configurations for each database, table, index, and possibly table partition, to ensure that the number of replicas and the replica placement would achieve their latency and survival goals. This was a complex and error-prone process, as a single mistake in any of the zone configuration settings could mean that the database didn’t perform as desired. These usability problems motivated our efforts in 21.1.

Our goals for 21.1 were to:

Simplify the process of placing nodes in regions, and tracking where nodes were placed

Allow users to clearly state a survival goal for the database, in terms they’d understand

Dramatically simplify the configuration of tables to achieve latency goals and eliminate any need to modify zone configurations directly

How to keep track of database regions

Every node in a CockroachDB cluster can be started with locality information denoting its region and availability zone. Cluster topologies are therefore dynamic: new nodes can be added and existing nodes can be removed at any time. The regions comprising the cluster topology at any point can be viewed using the SHOW REGIONS FROM CLUSTER command.

demo@127.0.0.1:26257/defaultdb> SHOW REGIONS FROM CLUSTER;

region | zones

---------------+----------

europe-west1 | {b,c,d}

us-east1 | {b,c,d}

us-west1 | {a,b,c}

Time: 81ms total (execution 81ms / network 0ms)

In contrast, all multi-region patterns work in the context of a set of static database regions. In 21.1, we introduced new variants of the ALTER DATABASE and CREATE DATABASE commands to bridge this gap. Users can now declare which regions their database operates in, adding and removing them with declarative SQL.

demo@127.0.0.1:26257/defaultdb> create database multi_region primary region "us-east1" regions "europe-west1";

CREATE DATABASE

Time: 25ms total (execution 25ms / network 0ms)

demo@127.0.0.1:26257/defaultdb> show regions from database multi_region;

database | region | primary | zones

---------------+--------------+---------+----------

multi_region | us-east1 | true | {b,c,d}

multi_region | europe-west1 | false | {b,c,d}

(2 rows)

Time: 18ms total (execution 18ms / network 0ms)
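Regions can also be added to or removed from a database after it has been created. The statements below are a minimal sketch, assuming the multi_region database from the example above and a cluster that has nodes in us-west1 (a region has to appear in SHOW REGIONS FROM CLUSTER before it can be added to a database):

```sql
-- Add a third region to the database.
ALTER DATABASE multi_region ADD REGION "us-west1";

-- Check the resulting set of database regions.
SHOW REGIONS FROM DATABASE multi_region;

-- Remove the region again; this only succeeds if no data is homed there.
ALTER DATABASE multi_region DROP REGION "us-west1";
```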

Internally, CockroachDB uses an enum to keep track of the regions a particular database operates in. We create a special internal type, called crdb_internal_region, the very first time a user “goes multi-region” with their database (either at database creation, or through an ALTER DATABASE SET PRIMARY REGION statement). This type works very similarly to user-defined types, except that the multi-region enum also manages some metadata. Additionally, directly altering this type is disallowed, as the database must have full control over the set of database regions.

demo@127.0.0.1:26257/defaultdb> SHOW ENUMS FROM multi_region.public;

schema | name | values | owner

---------+----------------------+----------------------------------+--------

public | crdb_internal_region | {europe-west1,us-east1} | demo

(1 row)

Time: 34ms total (execution 34ms / network 0ms)

At this point you might be wondering why bother with this special enum? Why not save some strings somewhere and be done with it? Well, for a couple of reasons. The first is that having an enum type allows us to create columns of that type, and this is how we power REGIONAL BY ROW tables (described in more detail below).

Enums also enable us to provide reasonable semantics around dropping regions. Under the hood, region removal is powered by the ALTER TYPE … DROP VALUE statement. Like the other multi-region abstractions discussed in this blog post, ALTER TYPE … DROP VALUE isn’t supported in Postgres; it’s a CockroachDB extension that we implemented in the 21.1 release to enable the region management use case. That said, the feature also stands on its own. Dropping enum values, and regions, leverages the schema change infrastructure in CockroachDB.

The semantics around dropping regions are that users are only allowed to drop a region if no data is explicitly localized in that region. Specifically, say a user is trying to drop the region “us-east” from their database. This will only succeed if no REGIONAL BY TABLE table is homed in “us-east” and no REGIONAL BY ROW table has a row in “us-east”. Leveraging the schema change infrastructure that powers enums allows CockroachDB to perform this validation without disrupting foreground traffic.
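Before dropping a region, it can help to check whether any rows are still homed there. The following is a rough sketch rather than a required procedure. It assumes a REGIONAL BY ROW table named users (like the one used in the examples later in this post) in the multi_region database; crdb_region is a hidden column, so it has to be selected by name.

```sql
-- Count rows per home region in a REGIONAL BY ROW table.
SELECT crdb_region, count(*) AS row_count
FROM users
GROUP BY crdb_region;

-- Once no rows and no REGIONAL BY TABLE tables remain in the region,
-- the drop should pass validation.
ALTER DATABASE multi_region DROP REGION "europe-west1";
```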

How to maintain high availability and survive zone & region failures

One of the main reasons that users seek out multi-region deployments is for high availability and greater failure tolerance. When a database runs on a single node, it clearly cannot tolerate even a single node failure (if the node it’s running on fails, the database becomes unavailable). When you scale a database out to multiple nodes, it can begin to survive a single node failure, which is better, but not sufficient for many use cases.

As a database scales out to multiple nodes, across different availability zones, it can begin to survive the failure of a full availability zone (say, in the case of a building fire, a network outage around the data center, or even human error). As it scales out beyond availability zones to multiple regions, it can now begin to tolerate full region failure (say, in the case of a region-level network outage or a natural disaster), which makes it very unlikely that the database will become unavailable due to failures.

CockroachDB allows users to specify whether or not their database should be able to survive an availability zone failure or a region failure. Under the covers, these goals are satisfied using the zone configuration infrastructure. Whereas in the past, users had to set these zone configurations manually to ensure failure tolerance, in 21.1 these are set automatically to reduce the burden on the user.
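To see what the system has configured on your behalf, you can inspect the survival goal and the generated zone configuration. A small sketch, assuming the multi_region database created earlier (the exact fields shown in the output vary by version and topology):

```sql
-- Show the survival goal currently set on the database.
SHOW SURVIVAL GOAL FROM DATABASE multi_region;

-- Inspect the zone configuration that CockroachDB generated for it.
SHOW ZONE CONFIGURATION FROM DATABASE multi_region;
```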

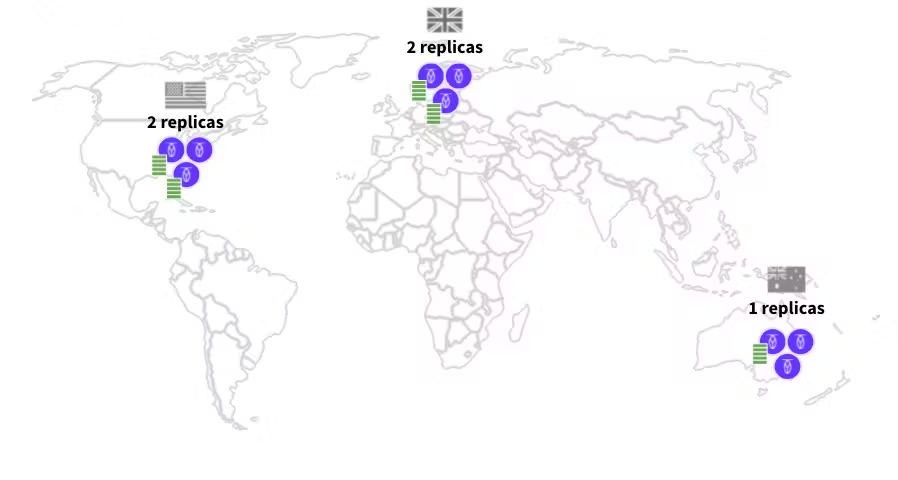

ZONE survivability (the default)

By default, a database is created with ZONE survivability. This means that the system will create a zone configuration with at least 3 replicas, and will spread these replicas out amongst the available regions defined in the database. This is illustrated in the figure below where we have a database configured with ZONE survivability which has placed three replicas in three separate availability zones in the US region (the US region is the database’s primary region). This means that writes in the US region will be fast (as they won’t need to replicate out of the region) but writes from the UK and Australia regions will need to consult the US region.

As we’ll discuss in more detail in an upcoming blog post, the system will also place additional non-voting replicas to guarantee that stale reads from all regions can be served locally.

[Image 1: Example of a multi-region CockroachDB deployment distributed in the US, the UK, and Australia with ZONE survivability (the default fault tolerance level) selected.]

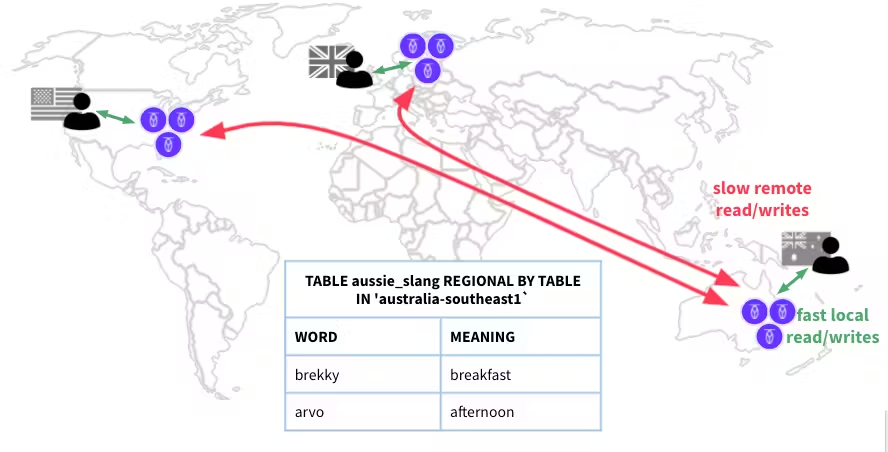

Upgrade to REGION survivability

If the user chooses to upgrade to REGION survivability, the database will create five replicas of the data. The additional replicas are designed not only to allow for greater fault tolerance but also to ensure that writes can satisfy their quorum replication requirements by consulting only one additional region (relative to the write’s leaseholder). This is made possible by placing two replicas in the write-optimized region (in the diagram below, that’s the US region), and spreading the remaining replicas out over the other regions. The end result is that the need for consulting three out of the five replicas to achieve quorum can be handled by consulting only a single region aside from the write-optimized region.

For example, in the figure below, writes to the US region need only consult either the UK region or the Australia region to achieve quorum. An additional benefit to placing replicas this way is that in the event of an availability zone failure in the US region, there remains one replica in that region to take over leaseholder responsibilities, and continue to service low-latency consistent reads from that region.

[Image 2: Example of a multi-region CockroachDB deployment distributed in the US, the UK, and Australia with REGION survivability (the upgraded fault tolerance level) selected.]

How to upgrade from ZONE to REGION survivability

As mentioned above, databases by default are created with ZONE survivability, but can be changed to REGION survivability either at database creation time, or via an ALTER DATABASE statement. To upgrade to REGION survivability, a database must have at least three regions, as it’s not possible to place replicas in only two regions and ensure that if one of those regions fails the database remains available.
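The statements below sketch both ways of selecting REGION survivability. They assume the multi_region database from earlier (which has only two regions, so a third is added first) and a cluster with nodes in us-west1; multi_region_ha is a hypothetical database name used only for illustration.

```sql
-- REGION survivability needs at least three database regions.
ALTER DATABASE multi_region ADD REGION "us-west1";

-- Upgrade the survival goal on an existing database.
ALTER DATABASE multi_region SURVIVE REGION FAILURE;

-- Or choose the survival goal at creation time (hypothetical database name).
CREATE DATABASE multi_region_ha
    PRIMARY REGION "us-east1"
    REGIONS "europe-west1", "us-west1"
    SURVIVE REGION FAILURE;

-- Return to the default goal if region survival is no longer needed.
ALTER DATABASE multi_region SURVIVE ZONE FAILURE;
```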

How to specify low-latency access by table

Another key decision when creating a multi-region database is how to optimize the latency of reading or writing data based on your workload. Prior to v21.1, developers had to define partitions and zone configurations on each table manually to get the multi-region setup that best optimized access to their data. This was cumbersome and prone to error.

In 21.1 we’ve introduced three table-level locality configurations that help you control this: REGIONAL BY TABLE, REGIONAL BY ROW and GLOBAL. These are specified in the existing CREATE TABLE syntax:

CREATE TABLE aussie_slang (word TEXT PRIMARY KEY, meaning TEXT) LOCALITY REGIONAL BY TABLE IN 'australia-southeast1';

CREATE TABLE users (id INT PRIMARY KEY, username STRING) LOCALITY REGIONAL BY ROW;

CREATE TABLE promo_codes (code TEXT PRIMARY KEY, discount INT) LOCALITY GLOBAL;

With these simple keywords, partitions and zone configurations are automatically configured underneath. These will adapt to any region changes specified on your database.

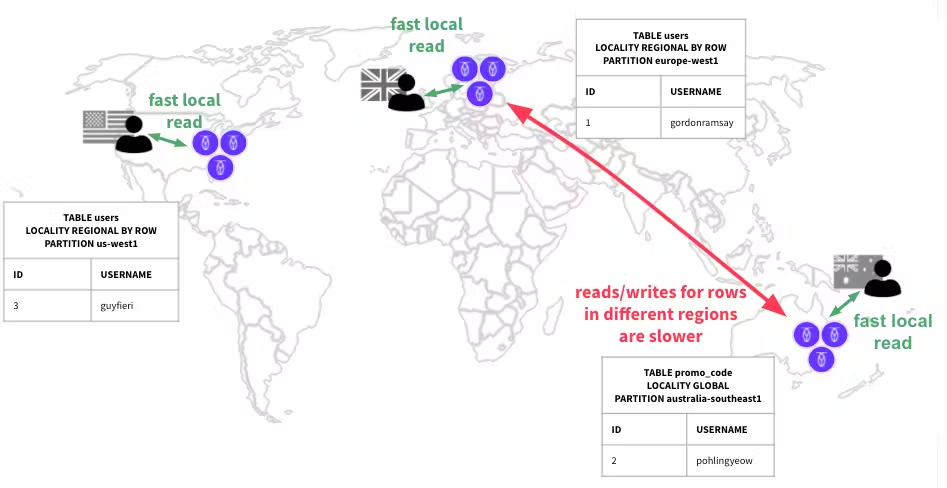

REGIONAL BY TABLE

For tables with locality REGIONAL BY TABLE, all data in a given table will be optimized for reads and writes in a single region by placing voting replicas and leaseholders there. This is useful if your data is usually read/written in only one region, and the round trip across regions is acceptable in the rare cases where data is accessed from a different region.

[Image 3: Example of a multi-region CockroachDB deployment that uses REGIONAL BY TABLE to optimize for reads and writes within one region.]

In a multi-region database, REGIONAL BY TABLE IN PRIMARY REGION is the default locality for tables if the LOCALITY is not specified during CREATE TABLE (or if an existing database is converted to a multi-region database by adding a primary region). You can specify which region you wish this data to live in by specifying REGIONAL BY TABLE IN <region> (for example, REGIONAL BY TABLE IN 'australia-southeast1').

Under the covers, REGIONAL BY TABLE leverages the zone configuration infrastructure to pin the leaseholder in the specified region. If the database is specified to use ZONE survivability, it will place all three voting replicas (along with the leaseholder) in the specified region to ensure that writes can be acknowledged without a cross-region network hop. If the database is specified to use REGION survivability, two voting replicas (and the leaseholder) will be placed in the specified region, and the remaining three voting replicas will be spread out throughout the remaining regions.
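The locality of an existing table can be changed as well. A brief sketch, assuming the aussie_slang table from the example above and a database whose regions include australia-southeast1:

```sql
-- Home the table in a specific database region.
ALTER TABLE aussie_slang SET LOCALITY REGIONAL BY TABLE IN "australia-southeast1";

-- Or follow the database's primary region, whatever it is set to.
ALTER TABLE aussie_slang SET LOCALITY REGIONAL BY TABLE IN PRIMARY REGION;

-- Confirm the result; the locality appears in the CREATE statement.
SHOW CREATE TABLE aussie_slang;
```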

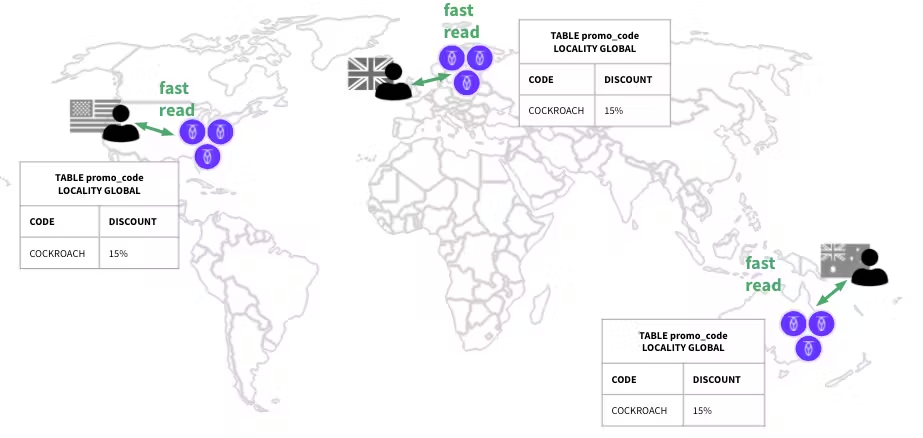

REGIONAL BY ROW

For tables with locality REGIONAL BY ROW, individual rows can be homed to a region of your choosing. This is useful for tables where data should be localized for the given application.

[Image 4: Example of a multi-region CockroachDB deployment that uses REGIONAL BY ROW to home individual rows to a specified location.]

To make this possible, a new hidden column crdb_region is introduced to every REGIONAL BY ROW table, with the type of the column being the crdb_internal_region enum mentioned above. By default, the value of this column for each inserted row will be set to the gateway region (the region in which the INSERT was performed).

You can optionally choose to specify the crdb_region explicitly in your INSERT clause (e.g. INSERT INTO users (crdb_region, id, username) VALUES ('australia-southeast1', 1, 'russell coight')), which will insert that row into the specified region. In addition to the hidden column, REGIONAL BY ROW tables (and all of their indexes) are automatically partitioned so that there’s one partition for each possible region. The partitions are then pinned to the specified region by assigning a zone configuration. Partitions are automatically added or dropped when regions are re-configured on the database.
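The behavior described above can be explored with a few statements. This is a sketch under assumptions: it uses the REGIONAL BY ROW users table from the earlier example, assumes australia-southeast1 is one of the database's regions, and inserts a made-up row with id 2.

```sql
-- Which region is this connection's gateway node in?
SELECT gateway_region();

-- Insert without naming a region: the row is homed in the gateway region.
INSERT INTO users (id, username) VALUES (2, 'example user');

-- crdb_region is hidden, so it must be selected explicitly.
SELECT crdb_region, id, username FROM users;

-- Re-home an existing row by updating its region.
UPDATE users SET crdb_region = 'australia-southeast1' WHERE id = 2;

-- List the per-region partitions that were created automatically.
SHOW PARTITIONS FROM TABLE users;
```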

Another option is to make crdb_region a computed column, which can take properties from another column to dictate the region. You can then use REGIONAL BY ROW AS <column> to use the given column in place of crdb_region. For example, if there were a country column for a given user, an appropriate computed column may be:

-- Derive each row's home region from the user's country.

ALTER TABLE users ADD COLUMN crdb_region_col crdb_internal_region AS (

CASE

WHEN country IN ('au', 'nz') THEN 'ap-southeast1'

WHEN country IN ('uk', 'nl') THEN 'europe-west1'

ELSE 'us-east1'

END

) STORED;
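Once the computed column exists, the table can be switched to use it for row placement. A minimal sketch, assuming the crdb_region_col column above has been added successfully and that every region named in the CASE expression is a region of the database:

```sql
-- The region column must not contain NULLs before it can drive row placement.
ALTER TABLE users ALTER COLUMN crdb_region_col SET NOT NULL;

-- Use the computed column instead of the hidden crdb_region column.
ALTER TABLE users SET LOCALITY REGIONAL BY ROW AS crdb_region_col;
```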

In the past, REGIONAL BY ROW tables required that the user manually partition the table and apply zone configurations for each partition, which was labor-intensive and error-prone. Furthermore, it required developers to explicitly specify a “region” as a prefix to their PRIMARY KEY for partitioning to work, which meant developers had to account for this column to service certain queries in an optimized fashion.

In CockroachDB 21.1, we introduced two new concepts for supporting REGIONAL BY ROW and addressing the past pain points – implicit partitioning and locality optimized search. These will also be discussed in detail in an upcoming blog post.

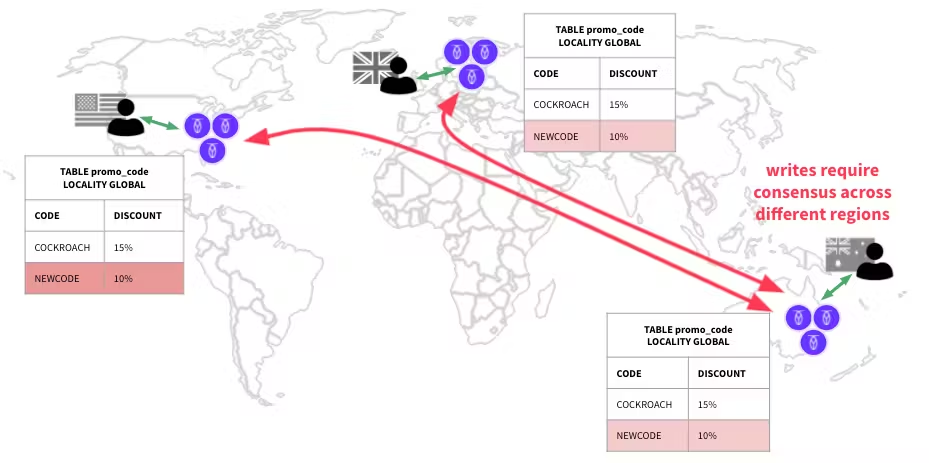

GLOBAL

For tables with locality GLOBAL, we introduced a new “global read” protocol that allows for reliably fast consistent reads in all regions. However, writes are more expensive as they require coordination across nodes in different regions. This locality is useful for tables that receive a low volume of writes but a high volume of reads from many different regions.

[Image 5: Example of a multi-region CockroachDB deployment that includes tables with locality GLOBAL. In this case, reads are fast and consistent in all regions, but writes are more expensive.]

[Image 6: Example of a multi-region CockroachDB deployment that includes tables with locality GLOBAL. To support fast historical reads, non-voting replicas have been placed in each region.]

For global tables, the voting replicas are all placed in the primary region. To support fast present-time reads, however, we also place non-voting replicas in remote regions. This configuration, coupled with some changes we made to the transaction protocol of global tables, ensures that we can service consistent reads in all regions, at all times.

Support for GLOBAL was not possible before v21.1. Two existing features achieved similar goals with different tradeoffs:

Follower reads, which can only serve a consistent view of data at a historical point in time. As a result, they cannot be used in read/write transactions.

Duplicate indexes, which allowed indexes to be stored locally in different regions for faster read access. However, this has the drawback of taking extra space and requiring extra consensus, which has the potential to cause transaction retries.

The implementation of the “global read” protocol will be detailed in an upcoming blog post.
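To make the contrast with follower reads concrete, here is a short sketch. It assumes the promo_codes table from the earlier example; the code value 'WELCOME10' is made up for illustration.

```sql
-- Make a read-mostly table GLOBAL.
ALTER TABLE promo_codes SET LOCALITY GLOBAL;

-- A consistent, present-time read that a GLOBAL table can serve in any region.
SELECT discount FROM promo_codes WHERE code = 'WELCOME10';

-- For comparison, a follower read: served locally, but from a historical timestamp.
SELECT discount FROM promo_codes
AS OF SYSTEM TIME follower_read_timestamp()
WHERE code = 'WELCOME10';
```

The first query can take part in a read/write transaction, while the follower read is limited to historical, read-only use.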

Diving even deeper

The changes we’ve described here are just the tip of the iceberg of what the multi-region team at Cockroach Labs has been working on in 21.1. As mentioned above, in future blog posts we’re going to dive deeper into the inner workings of replica placement for multi-region databases, the novel transaction protocol we implemented to support GLOBAL tables, and the details of how REGIONAL BY ROW tables work.

And of course, if solving complex multi-region problems is something that excites you, you might want to check out opportunities to join our team.