This page describes how major-version and patch upgrades work and shows how to upgrade a self-hosted CockroachDB cluster.

To upgrade a cluster in CockroachDB Cloud, refer to Upgrade a cluster in CockroachDB Cloud instead.

To upgrade a cluster with physical cluster replication (PCR), refer to Upgrade a Cluster Running PCR.

Overview

Types of upgrades

Major-version upgrades: A major-version upgrade, such as from v24.2 to v24.3, may include new features, updates to cluster setting defaults, and backward-incompatible changes. Performing a major-version upgrade requires an additional step to finalize the upgrade.

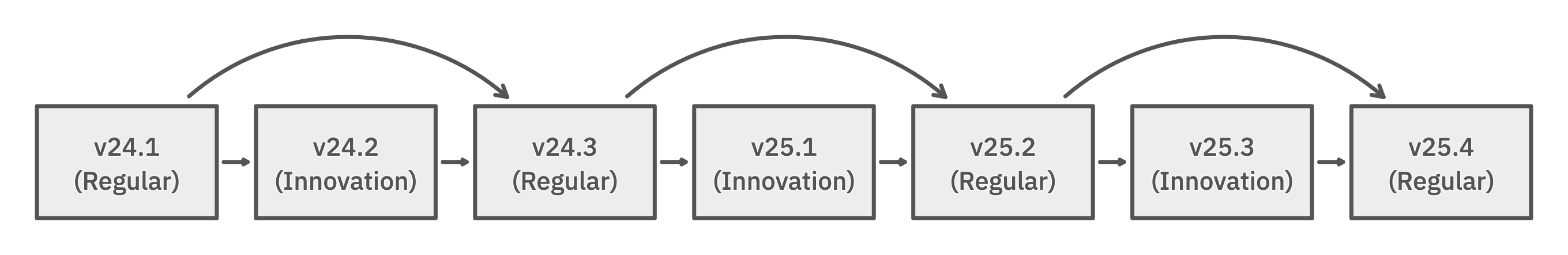

As of 2024, every second major version is an Innovation release. Innovation releases offer shorter support windows and can be skipped.

Patch upgrades: A patch upgrade moves a cluster from one patch release to another within a major version, such as from v24.2.3 to v24.2.4. Patch upgrades do not introduce backward-incompatible changes.

A major version of CockroachDB has two phases of patch releases: a series of testing releases (alpha, beta, and release candidate (RC) releases) followed by a series of production releases (vX.Y.0, vX.Y.1, and so on). A major version’s first production release (the .0 release) is also known as its GA release.

To learn more about CockroachDB major versions and patches, refer to the Releases Overview.

Compatible versions

A cluster may always be upgraded to the next major release. Every second major version is an Innovation release that can be deployed or skipped:

If your cluster is running a major version that is a Regular release, it can be upgraded to either:

- the next major version (an Innovation release)

- the release that follows the next major version (the next Regular release, once it is available, skipping the Innovation release).

If a cluster is running a major version that is labeled an Innovation release, it can be upgraded only to the next Regular release.
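The rules above can be expressed as a small decision procedure. The following POSIX shell sketch takes the version the cluster is currently running and an oldest-first list of releases, each labeled regular or innovation. It prints the versions the cluster may be upgraded to. The function name upgrade_targets and the version:type input format are hypothetical. The release labels in the usage note are illustrative, so confirm the actual classification in the Releases Overview.

```shell
#!/bin/sh
# Hypothetical helper: list the major versions a cluster may be upgraded to.
# Usage: upgrade_targets CURRENT RELEASE...
#   Each RELEASE is "version:type", oldest first; type is "regular" or "innovation".
upgrade_targets() {
  local current="$1" found=0 cur_type="" seen=0 rel ver typ
  shift
  for rel in "$@"; do
    ver="${rel%%:*}"
    typ="${rel#*:}"
    if [ "$found" -eq 0 ]; then
      # Skip releases until we reach the one the cluster is running.
      if [ "$ver" = "$current" ]; then
        found=1
        cur_type="$typ"
      fi
      continue
    fi
    seen=$((seen + 1))
    if [ "$seen" -eq 1 ]; then
      # The next major version is always a valid target.
      echo "$ver"
      # An Innovation release may move only to the next major version (the next
      # Regular release), and a Regular release cannot be skipped: stop here.
      if [ "$cur_type" = innovation ] || [ "$typ" = regular ]; then
        return 0
      fi
    else
      # From a Regular release, the Innovation release may be skipped to reach
      # the following Regular release, once it exists.
      if [ "$typ" = regular ]; then
        echo "$ver"
      fi
      return 0
    fi
  done
  return 0
}
```

With these illustrative labels, upgrade_targets v24.3 v24.3:regular v25.1:innovation v25.2:regular prints v25.1 and v25.2. Starting from v25.1, the same list yields only v25.2.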

CockroachDB v25.1 is an Innovation release. To upgrade to it, you must be running v24.3, the previous Regular release.

Before continuing, upgrade to v24.3.

CockroachDB's multi-active availability means that your cluster remains available while you upgrade one node at a time in a rolling fashion. While a node is being upgraded, its resources are not available to the cluster.

Both major-version and patch upgrades involve the following high-level steps, which are described in detail in the following sections.

- On one node at a time:

  - Replace the previous cockroach binary or container image with the new one.

  - Restart the cockroach process and verify that the node has rejoined the cluster.

When all nodes have rejoined the cluster:

- For a patch upgrade within the same major version, the upgrade is complete.

- For a major-version upgrade, the upgrade is not complete until it is finalized. Certain features and performance improvements, such as those requiring changes to system schemas or objects, are not available until the upgrade is finalized. Refer to the v26.1 Release Notes for details.

For self-hosted CockroachDB, automatic finalization is enabled by default and begins as soon as all nodes have rejoined the cluster using the new binary. If you need the ability to roll back a major-version upgrade, you can disable auto-finalization and finalize the upgrade manually.

Once a major-version upgrade is finalized, the cluster cannot be rolled back to the prior major version.

Prepare to upgrade

Before beginning a major-version or patch upgrade:

- Verify the overall health of your cluster using the DB Console:

  - Under Node Status, make sure all nodes that should be live are listed as such. If any nodes are unexpectedly listed as SUSPECT or DEAD, identify why the nodes are offline and either restart them or decommission them before beginning your upgrade. If there are DEAD and non-decommissioned nodes in your cluster, the upgrade cannot be finalized. If any node is not fully decommissioned, try the following:

    - First, reissue the decommission command. The second command typically succeeds within a few minutes.

    - If the second decommission command does not succeed, recommission the node and then decommission it again. Before continuing the upgrade, the node must be marked as decommissioned.

  - Under Replication Status, make sure there are 0 under-replicated and unavailable ranges. Otherwise, performing a rolling upgrade increases the risk that ranges will lose a majority of their replicas and cause cluster unavailability. Therefore, it's important to identify and resolve the cause of range under-replication and/or unavailability before beginning your upgrade.

  - In the Node List, make sure all nodes are on the same version. If any nodes are behind, upgrade them to the cluster's current version before continuing. Nodes that are behind also indicate that the previous major-version upgrade may not be finalized.

  - In the Metrics dashboards, make sure CPU, memory, and storage capacity are within acceptable values for each node. Nodes must be able to tolerate some increase in case the new version uses more resources for your workload. If any of these metrics is above healthy limits, consider adding nodes to your cluster before beginning your upgrade.

- Make sure your cluster is behind a load balancer, or your clients are configured to talk to multiple nodes. If your application communicates with only a single node, stopping that node to upgrade its CockroachDB binary will cause your application to fail.
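Some of the DB Console checks above can also be scripted. This shell sketch runs cockroach node status --ranges --format=tsv and fails in three cases: a node is not live, a node reports unavailable or under-replicated ranges, or the nodes report more than one build. It assumes the output contains the columns id, build, is_live, ranges_unavailable, and ranges_underreplicated, so verify the column names against the cockroach node status output of your version. The function name check_cluster_health is hypothetical. Any connection flags, such as --certs-dir and --host, are passed through unchanged. The sketch does not check decommissioning state or resource metrics.

```shell
#!/bin/sh
# Hypothetical pre-upgrade health check built on `cockroach node status`.
# Usage: check_cluster_health [CONNECTION FLAGS...]
# Exit status: 0 = healthy, 1 = problems found, 2 = output could not be parsed.
check_cluster_health() {
  cockroach node status --ranges --format=tsv "$@" | awk -F'\t' '
    # Skip blank lines and any "#"-prefixed footer lines.
    /^#/ || NF == 0 { next }
    # The first remaining line is the header: map column names to positions.
    !hdr {
      for (i = 1; i <= NF; i++) col[$i] = i
      hdr = 1
      n = split("id build is_live ranges_unavailable ranges_underreplicated", need, " ")
      for (k = 1; k <= n; k++)
        if (!(need[k] in col)) { print "missing column: " need[k]; missing = 1; exit }
      next
    }
    # One line per node.
    {
      id = $(col["id"])
      builds[$(col["build"])] = 1
      if ($(col["is_live"]) != "true") { print "node " id " is not live"; bad = 1 }
      if ($(col["ranges_unavailable"]) + 0 > 0) { print "node " id " reports unavailable ranges"; bad = 1 }
      if ($(col["ranges_underreplicated"]) + 0 > 0) { print "node " id " reports under-replicated ranges"; bad = 1 }
    }
    END {
      if (missing) exit 2
      if (!hdr) { print "no output from cockroach node status"; exit 2 }
      nb = 0
      for (b in builds) nb++
      if (nb > 1) { print "nodes report " nb " different builds"; bad = 1 }
      exit (bad ? 1 : 0)
    }'
}
```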

By default, the storage engine uses a compaction concurrency of 3. If you have sufficient IOPS and CPU headroom, you can consider increasing this setting via the COCKROACH_COMPACTION_CONCURRENCY environment variable. This may help to reshape the LSM more quickly in inverted LSM scenarios, and it can lead to increased overall performance for some workloads. Cockroach Labs strongly recommends testing your workload against non-default values of this setting.

CockroachDB is designed with high fault tolerance. However, taking regular backups of your data is an operational best practice for disaster recovery planning. Refer to Restoring backups across versions.

Review the v24.3 Release Notes, as well as the release notes for any skipped major version. Pay careful attention to the sections for backward-incompatible changes, deprecations, changes to default cluster settings, and features that are not available until the upgrade is finalized.

Optionally disable auto-finalization to preserve the ability to roll back a major-version upgrade instead of finalizing it. If auto-finalization is disabled, a major-version upgrade is not complete until it is finalized.

Ensure you have a valid license key

To perform major-version upgrades, you must have a valid license key.

Patch version upgrades can be performed without a valid license key, with the following limitations:

- The cluster will run without limitations for a specified grace period. During that time, alerts are displayed that the cluster needs a valid license key. For more information, refer to the Licensing FAQs.

- The cluster is throttled at the end of the grace period if no valid license key is added to the cluster before then.

To finalize a major-version upgrade on a cluster with an Enterprise Free or Enterprise Trial license, you must enable telemetry using the diagnostics.reporting.enabled cluster setting:

SET CLUSTER SETTING diagnostics.reporting.enabled = true;

If a cluster with an Enterprise Free or Enterprise Trial license is upgraded across patch versions and does not meet telemetry requirements:

- The cluster will run without limitations for a 7-day grace period. During that time, alerts are displayed that the cluster needs to send telemetry.

- The cluster is throttled if telemetry is not received before the end of the grace period.

For more information, refer to the Licensing FAQs.

If you want to stay on the previous version, you can roll back the upgrade before finalization.

Perform a patch upgrade

To upgrade from one patch release to another within the same major version, perform the following steps on one node at a time:

- Replace the cockroach binary on the node with the binary for the new patch release. For containerized workloads, update the deployment's image to the image for the new patch release.

- Restart CockroachDB on the node.

- Verify that the node has rejoined the cluster.

Ensure that the node is ready to accept a SQL connection.

Unless there are tens of thousands of ranges on the node, it's usually sufficient to wait one minute. To be certain that the node is ready, run the following command:

cockroach sql -e 'select 1'

When all nodes are running the new patch version, the upgrade is complete.
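The per-node procedure above can be automated with a loop. The loop upgrades one node, waits until that node accepts SQL connections, and only then moves on to the next node. In this POSIX shell sketch, replace_and_restart is a hypothetical hook that you must define for your environment. For example, it might install the new binary and restart a systemd unit, or update a container image. It is not part of CockroachDB. The readiness probe is the cockroach sql -e 'select 1' check described above. The sketch assumes connection security settings come from the client environment variables, such as COCKROACH_CERTS_DIR. The attempt count and delay are arbitrary defaults.

```shell
#!/bin/sh
# Sketch of a one-node-at-a-time rolling upgrade.
# ASSUMPTION: replace_and_restart HOST is a hypothetical, environment-specific
# hook that installs the new cockroach binary (or image) on HOST and restarts it.
# ASSUMPTION: security flags come from the environment (e.g. COCKROACH_CERTS_DIR).

# Poll HOST with a trivial query until it accepts SQL connections.
# Usage: wait_for_sql_ready HOST [ATTEMPTS] [DELAY_SECONDS]
wait_for_sql_ready() {
  local host="$1" attempts="${2:-60}" delay="${3:-5}" i=1
  while [ "$i" -le "$attempts" ]; do
    if cockroach sql --host="$host" -e 'select 1' >/dev/null 2>&1; then
      return 0
    fi
    # Wait before the next attempt (but not after the final one).
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "node $host did not accept SQL connections after $attempts attempts" >&2
  return 1
}

# Upgrade the given hosts in order. Stop at the first failure so that a
# problem on one node never leads to a second node being taken down.
# Usage: rolling_upgrade HOST...
rolling_upgrade() {
  local host
  for host in "$@"; do
    echo "upgrading $host" >&2
    replace_and_restart "$host" || return 1
    wait_for_sql_ready "$host" || return 1
  done
  echo "all nodes restarted on the new binary" >&2
}
```

For example, rolling_upgrade node1:26257 node2:26257 node3:26257. The same loop fits a major-version upgrade or a rollback, because only the binary that the hook installs changes.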

Roll back a patch upgrade

To roll back a patch upgrade, repeat the steps in Perform a patch upgrade, but replace the newer binary with the older binary.

Perform a major-version upgrade

To perform a major-version upgrade, complete the following steps on one node at a time:

- Replace the cockroach binary on the node with the binary for the new major version. For containerized workloads, update the deployment to use the image for the new major version.

- Restart CockroachDB on the node. For containerized workloads, use your orchestration framework to restart the cockroach process in the node's container.

- Ensure that the node is ready to accept a SQL connection. Unless there are tens of thousands of ranges on the node, this takes less than a minute. To be certain that the node is ready, run the following command to connect to the cluster and run a test query:

cockroach sql -e 'select 1'

If auto-finalization is enabled (the default), finalization begins as soon as the last node rejoins the cluster with the new binary. When finalization finishes, the upgrade is complete.

If auto-finalization is disabled, follow your organization's testing procedures to decide whether to finalize the upgrade or roll back the upgrade. After finalization begins, you can no longer roll back to the cluster's previous major version.

Finalize a major-version upgrade manually

To finalize a major-version upgrade:

Connect to the cluster using the SQL shell:

cockroach sql

Run the following command, replacing {VERSION} with the new major version, such as 26.1:

SET CLUSTER SETTING version = '{VERSION}';

A series of migration jobs runs to enable features and changes in the new major version that cannot be rolled back. These include changes to system schemas, indexes, and descriptors, as well as certain improvements and new features. Until the upgrade is finalized, these features are not available, and SHOW CLUSTER SETTING version returns the previous version.

You can monitor the progress of the migration on the DB Console Jobs page. Migration jobs have names in the format 26.1-{migration-id}. If a migration job fails or stalls, Cockroach Labs can use the migration ID to help diagnose and troubleshoot the problem. Each major version has different migration jobs with different IDs.

The amount of time required for finalization depends on the amount of data in the cluster, because finalization runs various internal maintenance and migration tasks. During this time, the cluster experiences a small amount of additional load.

Note: Finalization is not complete until all schema change jobs reach a terminal state. Finalization can take as long as the longest-running schema change.

When all migration jobs have completed, the upgrade is complete.
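To watch finalization from a script instead of the DB Console, you can count the migration and schema change jobs that have not yet reached a terminal state. This shell sketch queries crdb_internal.jobs, which is an internal table whose schema is not a stable API. It also assumes the job type names MIGRATION, SCHEMA CHANGE, and NEW SCHEMA CHANGE and the terminal statuses succeeded, failed, and canceled. Verify these values against SHOW JOBS output in your version. The function names are hypothetical.

```shell
#!/bin/sh
# Print how many migration / schema change jobs are still in progress.
# ASSUMPTIONS: crdb_internal.jobs (internal, unstable) exposes job_type and
# status columns, and the type/status strings below match your version.
# The awk filter skips blank and comment lines and prints the value under the header.
# Usage: pending_upgrade_jobs [CONNECTION FLAGS...]
pending_upgrade_jobs() {
  cockroach sql --format=tsv "$@" -e "
    SELECT count(*) FROM crdb_internal.jobs
    WHERE job_type IN ('MIGRATION', 'SCHEMA CHANGE', 'NEW SCHEMA CHANGE')
      AND status NOT IN ('succeeded', 'failed', 'canceled')" |
    awk '/^#/ || NF == 0 { next } ++n == 2 { print; exit }'
}

# Exit 0 when no such jobs remain, 1 while some are running, 2 on read errors.
# Usage: upgrade_jobs_done [CONNECTION FLAGS...]
upgrade_jobs_done() {
  local n
  n="$(pending_upgrade_jobs "$@")"
  if [ -z "$n" ]; then
    echo "could not read job count" >&2
    return 2
  fi
  [ "$n" -eq 0 ]
}
```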

To confirm that finalization has completed, check the cluster version:

SHOW CLUSTER SETTING version;

If the cluster continues to report that it is on the previous version, finalization has not completed. If auto-finalization is enabled but finalization has not completed, check for decommissioning nodes whose decommission has stalled. In most cases, issuing the decommission command again resolves the issue. If you have trouble upgrading, contact Support.
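The version check can be scripted as well. This shell sketch reads SHOW CLUSTER SETTING version in TSV format and succeeds only when the value exactly matches the expected major version, such as 26.1. Any other value, including an intermediate value reported while finalization is still running, is treated as not finalized. The function names are hypothetical, and connection flags are passed through unchanged.

```shell
#!/bin/sh
# Print the active cluster version (the value under the "version" header).
# Usage: cluster_version [CONNECTION FLAGS...]
cluster_version() {
  cockroach sql --format=tsv "$@" -e 'SHOW CLUSTER SETTING version' |
    awk '/^#/ || NF == 0 { next } ++n == 2 { print; exit }'
}

# Succeed only if the cluster reports exactly EXPECTED (for example 26.1).
# Usage: finalization_complete EXPECTED [CONNECTION FLAGS...]
finalization_complete() {
  local expected="$1" actual
  shift
  actual="$(cluster_version "$@")"
  if [ "$actual" = "$expected" ]; then
    return 0
  fi
  echo "cluster version is '$actual', expected '$expected'" >&2
  return 1
}
```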

Roll back a major-version upgrade

To roll back a major-version upgrade that has not been finalized:

- Follow the steps to perform a major-version upgrade, replacing the cockroach binary on each node with the binary for the previous major version.

Rollbacks do not require finalization.

Disable auto-finalization

By default, auto-finalization is enabled, and a major-version upgrade is finalized when all nodes have rejoined the cluster using the new cockroach binary. This means that by default, a major-version upgrade cannot be rolled back. Instead, you must restore from a backup to a new cluster running the previous major version.

To disable auto-finalization:

Connect to the cluster using the SQL shell. For example, in a Kubernetes deployment:

kubectl exec -it cockroachdb-client-secure \
  -- ./cockroach sql \
  --certs-dir=/cockroach-certs \
  --host=cockroachdb-public

Set the cluster setting cluster.auto_upgrade.enabled to false:

SET CLUSTER SETTING cluster.auto_upgrade.enabled = false;

Now, to complete a major-version upgrade, you must manually finalize it or roll it back.

Previously, to disable automatic finalization and preserve the ability to roll back a major-version upgrade, you had to set the cluster setting cluster.preserve_downgrade_option to the cluster's current major version before beginning the major-version upgrade, and then unset the setting to finalize the upgrade.

We now recommend managing a cluster's finalization policy using the cluster setting cluster.auto_upgrade.enabled, which was introduced in v23.2. The setting does not need to be modified after it is initially set.

Either of these settings prevents automatic finalization.
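Because either setting prevents automatic finalization, a pre-upgrade check may need to inspect both. This shell sketch reads cluster.auto_upgrade.enabled and cluster.preserve_downgrade_option and succeeds if either one blocks auto-finalization. The function names are hypothetical. The sketch assumes that an unset cluster.preserve_downgrade_option is reported as an empty value.

```shell
#!/bin/sh
# Print the value of cluster setting NAME, stripping any CSV-style quotes.
# Usage: setting_value NAME [CONNECTION FLAGS...]
setting_value() {
  local name="$1"
  shift
  cockroach sql --format=tsv "$@" -e "SHOW CLUSTER SETTING $name" |
    awk 'NR == 1 { next } /^#/ { next } { gsub(/"/, ""); print; exit }'
}

# Succeed (exit 0) if auto-finalization is currently prevented, either because
# cluster.auto_upgrade.enabled is false or because the legacy
# cluster.preserve_downgrade_option is set to a version.
# Usage: auto_finalization_blocked [CONNECTION FLAGS...]
auto_finalization_blocked() {
  local auto preserve
  auto="$(setting_value cluster.auto_upgrade.enabled "$@")"
  preserve="$(setting_value cluster.preserve_downgrade_option "$@")"
  [ "$auto" = false ] || [ -n "$preserve" ]
}
```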

Troubleshooting

After the upgrade has finalized (whether manually or automatically), it is no longer possible to roll back the upgrade. If you are experiencing problems, we recommend that you open a support request for assistance.

In the event of catastrophic failure or corruption, it may be necessary to restore from a backup to a new cluster running the previous major version.