When it comes to choosing a database, deployment, and configuration for your most critical workloads, you’ve got plenty of options. But how can you strike the right balance between performance, resilience, and cost? Let’s take a closer look at the important factors to assess when making these decisions.

What makes a workload mission-critical (tier 0)?

A mission-critical or tier zero workload is any database workload that your application can’t survive without. For an ecommerce company, for example, payment workloads are absolutely mission-critical – if that database goes down, sales stop and the entire company grinds to a halt.

You probably already know what your tier zero workloads are. If you’ve ever had the experience of losing one of those workloads due to an outage, their importance is probably burned into your memory.

So how can you protect them while still ensuring you get the performance you need?

Setting performance goals

Every workload is different, but if it’s critical to your business, then it’s important to spend time considering what kind of performance you need.

Below, we’ll discuss some of the most common performance factors to consider, but it’s important to remember that these don’t exist in a vacuum. Optimizing for performance in one area can result in compromises in other areas, so you’ll have to consider the big picture and prioritize accordingly.

(Note: we’ll be discussing these concepts mostly in the context of the database, since that’s the example we know best, but it’s also important to consider both performance and survival goals when architecting other areas of your application.)

Latency

Low latency is often a top priority for tier-0 workloads that serve user-facing applications, as any perceptible lag in the user experience is likely to lead to lost customers.

However, latency goals must often be balanced against consistency and resilience requirements, as problems with either of those can also lead to lost sales. It often makes sense to accept some increase in latency to ensure that your data is correct and your service remains available.

That said, there is almost certainly a limit beyond which the latency cost becomes too high. One of the challenges of performance optimization is finding the best balance for your specific workload.

Throughput

Throughput is likely to be of particular concern for tier-0 workloads that are spiky, with periods of high traffic that are likely to tax your application, and by extension your database.

If you need high throughput from your database, the first area to consider will be the database software itself. What kind of performance can it offer, in terms of transactions per second, at scale? Is this performance proven in real-world usage by companies that likely have larger workloads than yours?

(CockroachDB is optimized for performance at scale and is in use at companies ranging from Netflix to Fortune 50 banks. Just sayin’.)

Beyond the database software itself, it’s possible to optimize for database throughput by writing more efficient queries. Choosing a database with good observability features that can help you quickly identify inefficient queries will help with this, as can some third-party database monitoring tools.

Finally, throughput can be addressed at the hardware level. Of course, upgrading performance via hardware comes at a cost, and whether this cost increase makes economic sense will depend on the specifics of your workload.

In our 2022 Cloud Report benchmarking the top cloud instances from AWS, GCP, and Azure, we found that high-throughput storage, in particular, had an outsized impact on the total cost of operating a cloud database instance. Whether that premium is worth it will depend on your specific throughput requirements.

Consistency

For tier-0 workloads, strong consistency and serializable transactions are often a requirement because those workloads tend to deal with areas of the business where incorrect, outdated, or conflicting data could cause big problems: sales, orders, logistics, critical metadata, payments, etc.

This requirement presents a challenge to companies operating at scale, though, as using a distributed database solution can make achieving strong consistency more complicated. Next-gen distributed SQL databases exist to serve precisely this purpose, but replicating data across multiple nodes does impact latency, and the specifics of your deployment and database configuration can also impact the level of consistency your database delivers.

For example: CockroachDB offers strong consistency and serializable isolation at any scale, and extremely high availability depending on how it’s configured. However, because maintaining that consistency requires communication between the database nodes, writes on CockroachDB will incur a latency penalty when compared to what you’d expect from a non-distributed, single-instance deployment. Often, this penalty is small enough that it’s imperceptible to users, but – as in all distributed SQL databases – it is impacted by the details of your deployment. A multi-region deployment, for example, may have higher latency depending on the replication factor, as nodes across multiple regions may need to communicate to commit a write.
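To make the multi-region trade-off concrete, here is a minimal Python sketch of a simplified majority-quorum model. It assumes a write commits once a majority of replicas (counting the replica that coordinates the write) have acknowledged it, so commit latency is roughly the round trip to the slowest replica in the fastest majority. The function name and the round-trip figures are illustrative assumptions, not measurements of CockroachDB or of any cloud provider.

```python
def estimated_commit_latency_ms(follower_rtts_ms):
    """Estimate write commit latency under a simplified majority-quorum model.

    follower_rtts_ms: round-trip times (ms) from the coordinating replica
    to each of the other replicas. The coordinator counts as one
    acknowledgement with no network cost.
    """
    replicas = len(follower_rtts_ms) + 1   # followers plus the coordinator
    quorum = replicas // 2 + 1             # majority of all replicas
    acks_needed = quorum - 1               # the coordinator acknowledges itself
    if acks_needed == 0:
        return 0.0                         # single instance: no network round trip
    # The write commits once the fastest `acks_needed` followers have replied.
    return float(sorted(follower_rtts_ms)[acks_needed - 1])


# Hypothetical round-trip times, for illustration only.
same_region = estimated_commit_latency_ms([1.0, 1.5])                  # 3 replicas, one region
multi_region = estimated_commit_latency_ms([60.0, 140.0])              # 3 replicas, three regions
five_replicas = estimated_commit_latency_ms([1.0, 60.0, 70.0, 140.0])  # 5 replicas, mixed placement

print(same_region, multi_region, five_replicas)
```

In this model the penalty is driven by where the nearest majority sits rather than by the farthest replica, which is why replica placement matters as much as replica count.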

Scalability

Elastic, automatable scalability is a requirement for most tier-0 workloads. For smaller companies, this may be achievable through vertical scaling while maintaining a single-instance deployment, but for most firms this means horizontal scaling and the ability to quickly add or remove database nodes (either manually or via automation).

As we’ve discussed already, however, moving to a distributed database does introduce challenges when it comes to achieving your latency and consistency requirements. Here, it is common to err on the side of scalability, availability, and consistency, even though doing so does mean paying a (generally small) latency penalty.

Localizability

Some tier-0 workloads may also require data to be located in a specific geographic region, either for performance reasons or because it’s required for regulatory compliance. This is becoming increasingly common as governments around the world move to enact protections for their citizens’ most sensitive data, often requiring that such data must be stored and served from within their borders.

Achieving this kind of data localization is likely possible with almost any database, but it will require a lot of manual work to add to databases that weren’t built for it, and may introduce problems in other aspects of database performance. When data localization is a requirement, it’s generally best to stick with next-gen, cloud-native databases that were built with these sorts of multi-region deployments in mind.

Security

Performance isn’t just about getting fast and accurate data to your users. Getting it there safely also matters. Most companies have specific security requirements in place, and these may affect your options when it comes to choosing a database – a company that requires SOC 2 compliance, for example, will need to find a distributed database that has passed SOC 2 audits.

Monitoring

Finally, robust monitoring is a common requirement for any tier-0 workload, as good monitoring tools will make it easier to identify inefficiencies and potential problems before they cause real trouble. Most databases include monitoring tools, and mature databases will also surface much of that information via API to make it possible for you to monitor your database’s performance via your own bespoke systems and/or third-party monitoring tools.

Setting survival goals

Similar to the performance goals discussed above, survival goals tend to come with some trade-offs. The more replicas you make of your data, and the more locations you spread those replicas across, the more resilient your database will be. More replicas in more locations also generally means more cost, and often at least a bit more latency.

There are ways to mitigate this, but at the end of the day, a single-instance database is always going to be faster and cheaper than a multi-region database with a high replication factor. Of course, a single-instance database is also very susceptible to outages and disasters.
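The relationship between replica count and resilience can be sketched with simple majority-quorum arithmetic. In consensus-replicated databases (CockroachDB uses Raft), data stays available as long as a majority of its replicas can be reached. The helper names below are hypothetical, and the sketch counts replica failures only. Whether losing a node, an AZ, or a region takes out one replica or several depends on how the replicas are placed.

```python
def quorum_size(replication_factor):
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1


def tolerated_failures(replication_factor):
    """Replicas that can be lost while a majority remains reachable."""
    return replication_factor - quorum_size(replication_factor)


for rf in (1, 3, 5, 7):
    print(
        f"replicas={rf} "
        f"quorum={quorum_size(rf)} "
        f"tolerated_failures={tolerated_failures(rf)}"
    )
```

Note that under this arithmetic an even replica count adds cost without adding tolerance: four replicas survive one failure, the same as three.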

Application outages, even short ones, can be incredibly costly, especially for large enterprises. For example, a five-hour power outage at an operations center cost Delta $150 million in 2016. And while costs vary based on the specifics of the outage and the company affected, recent research shows that most outages – 60% – cost more than $100,000. 15% of outages cost more than $1 million. And those numbers, according to the research, are increasing.

(And needless to say, in the context of tier 0 workloads specifically, outage costs are likely to fall on the higher end of the spectrum, since losing them is likely to impact some or all of the functionality of your application.)

The question all companies must consider, then, is what their outage costs would be and, by extension, what their mission-critical workloads need to be able to survive.

Node failures – an error, crash, or outage affecting a single database server – are relatively common, and surviving at least a single node failure should generally be considered table stakes for companies of significant size, at least when it comes to their tier 0 workloads.

Availability zone (AZ) failures are less common, but they do happen. Software issues are less likely to knock out an AZ, but since all of the servers associated with an AZ tend to be in close geographic proximity, they are vulnerable to natural disasters, facilities issues, and other localized events.

Region outages are even less common, but they happen, too. Companies that are all-in on a single cloud and a single region can be devastated by these outages, which can affect most or all of their cloud services, not just the servers they’re running their databases on.

Multi-region outages and full cloud outages are certainly rare, but not unprecedented or unfathomable. Cyberattacks, large natural disasters, and wars are some factors that could potentially cause a cloud provider to lose two or more regions at once.

Generally speaking, though, most companies are interested in being able to survive multiple node failures or AZ failures. Large, multi-region enterprises also need to be able to survive region outages.

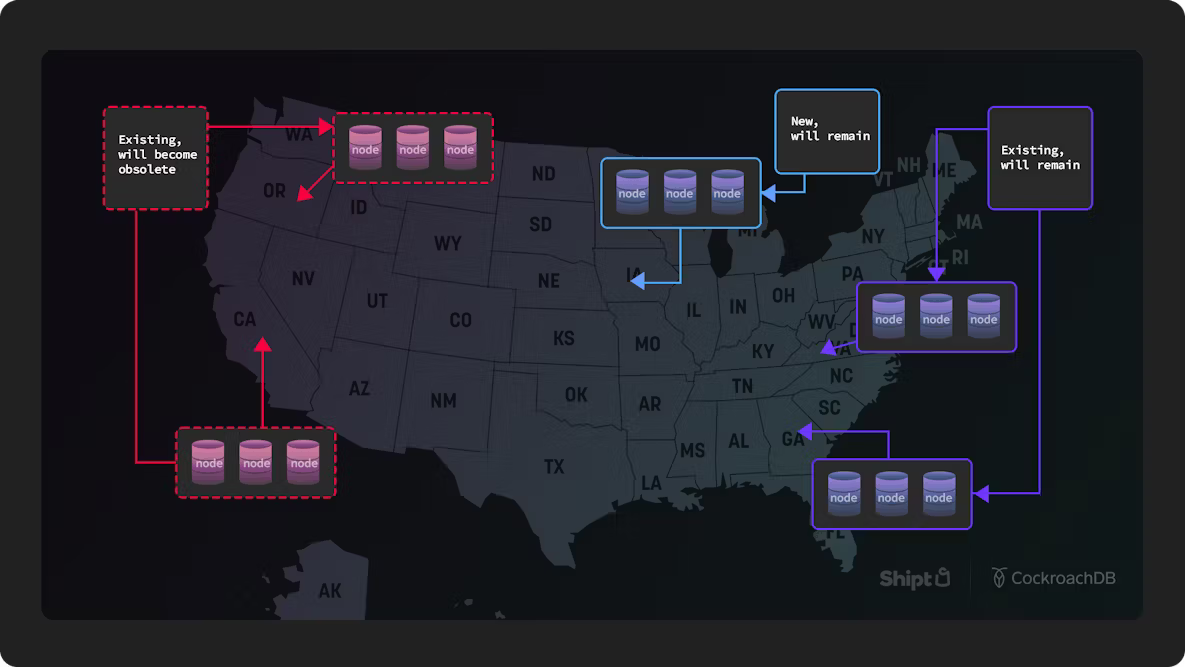

Below, let’s look at some of the specifics in terms of what’s required for the survival of different types of outages if you’re using CockroachDB.

Single-region survival goals

Multi-region survival goals

Balancing performance, resilience, and cost

As we can see, CockroachDB can offer powerful resilience, allowing you to survive multiple region failures and more. However, this type of deployment is more expensive (15 nodes vs. 3 nodes) and may result in more write latency (replication factor of 15 vs. 3) than a deployment whose goal is surviving a single node failure. That said, the increase in write latency could be offset by a decrease in read latency for some users, as multi-region deployments can be used to locate data closest to the users most likely to access it.

Whether the additional costs and latency are worth it for your workload will depend primarily on what an outage would cost you, as well as the specifics of your application and how it might be affected by higher write latencies (but potentially lower read latencies).
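One rough way to frame that decision is to compare the expected annual cost of outages under each deployment against the extra infrastructure spend. The Python sketch below is a back-of-the-envelope model only: every figure in it is a hypothetical placeholder, the function names are made up for illustration, and it ignores latency effects entirely.

```python
def expected_annual_outage_cost(outages_per_year, hours_per_outage, cost_per_hour):
    """Expected yearly outage cost: frequency x duration x hourly impact."""
    return outages_per_year * hours_per_outage * cost_per_hour


def net_annual_benefit(baseline_outage_cost, resilient_outage_cost, extra_infra_cost):
    """Outage cost avoided by the resilient deployment, minus what it costs extra."""
    return (baseline_outage_cost - resilient_outage_cost) - extra_infra_cost


# Hypothetical inputs: replace with your own estimates.
baseline = expected_annual_outage_cost(
    outages_per_year=2, hours_per_outage=3, cost_per_hour=50_000
)
resilient = expected_annual_outage_cost(
    outages_per_year=0.5, hours_per_outage=1, cost_per_hour=50_000
)
extra_infra = 120_000  # added yearly spend for more nodes and regions

net = net_annual_benefit(baseline, resilient, extra_infra)
print(f"baseline={baseline} resilient={resilient} net_benefit={net}")
```

A positive result suggests the more resilient deployment pays for itself under the assumed numbers, and a negative one suggests it does not. The answer is only as good as the outage estimates you feed in.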

Unfortunately, there’s no easy answer here. Each company must strike its own balance between performance, resilience, and cost. In the context of tier 0 workloads, however, it’s common to accept at least some performance and cost penalties in return for stronger resilience, because the cost of losing a tier 0 workload tends to be tremendous.

It’s also worth considering that in some cases, moving to a more resilient database can actually result in a cost savings because it allows you to eliminate many of the inefficiencies associated with trying to operate a legacy database at scale.

Whatever conclusions you come to about the most effective balance of performance and resilience for your tier zero workloads, remember to validate them by running less critical workloads on your new setup before (very carefully) moving the tier zero stuff over.