At Cockroach Labs, we write quite a bit about consensus algorithms. They are a critical component of CockroachDB and we rely on them in the lower layers of our transactional, scalable, distributed key-value store. In fact, large clusters can contain tens of thousands of consensus groups because in CockroachDB, every Range (similar to a shard) is an independent consensus group. Under the hood, we run a large number of instances of Raft (a consensus algorithm), which has come with interesting engineering challenges. This post dives into one that we’ve tackled recently: adding support for atomic replication changes (“Joint Quorums”) to etcd/raft and using them in CockroachDB to improve resilience against region failures.

A replication change is a configuration change of a Range, that is, a change in where the consistent copies of that Range should be stored. Let’s use a standard deployment topology to illustrate this.

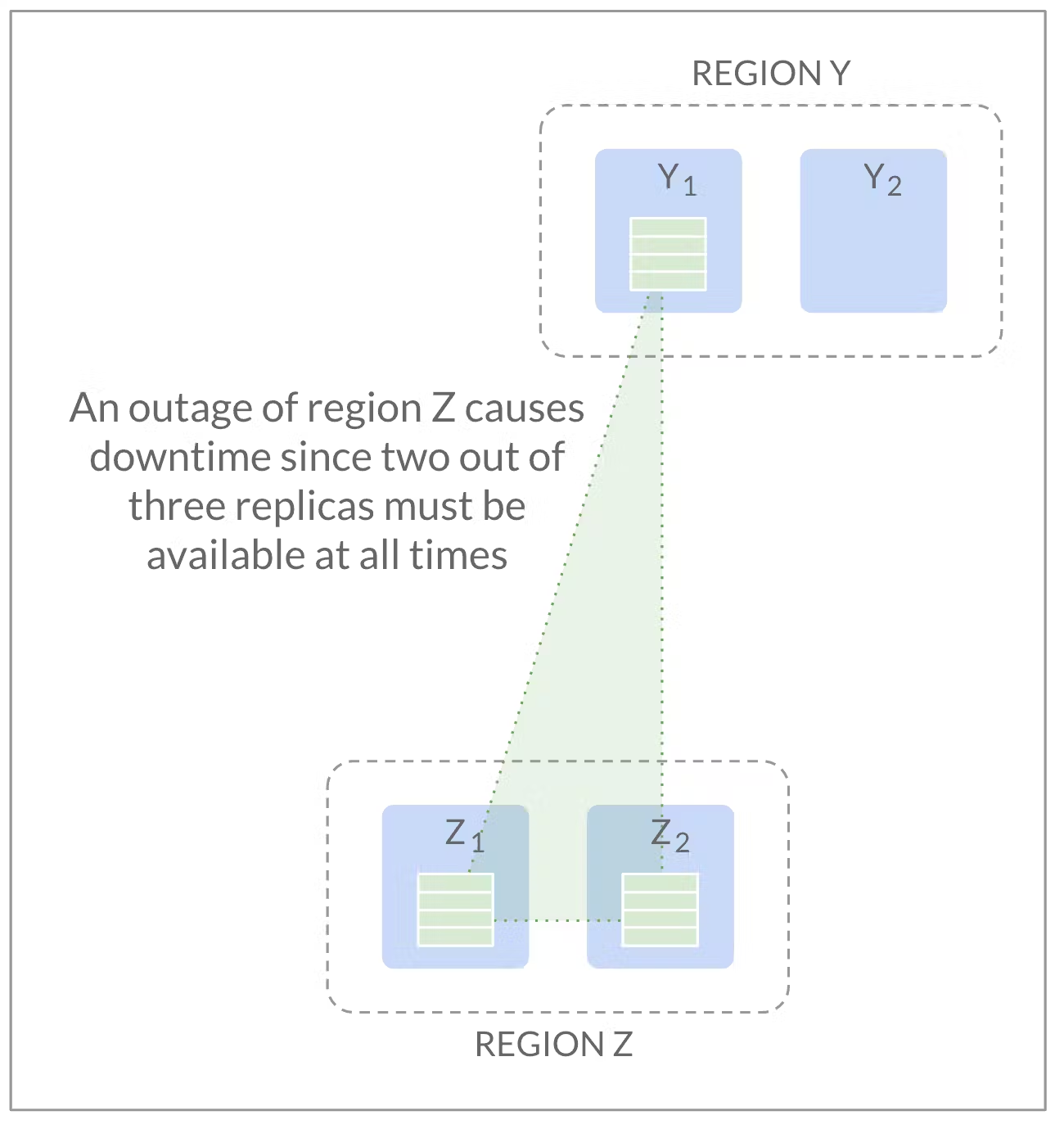

The above deployment has three regions (i.e. data centers). CockroachDB enables globally deployed applications, so these regions may well be placed across the globe. We see that there are two nodes in each of the regions X, Y and Z, and we see a Range which has one replica (“copy”) in each of the regions. This deployment survives the failure of a single region: consensus replication will continue to work as long as a majority of replicas are available. If a region fails, we lose at most one replica and two - a majority - remain, so the database will continue to operate normally after a short timeout. If we placed two replicas in, say, Z and the third replica in Y, a failure of region X would take two of the replicas with it, leaving only a single replica available; this single survivor would not be able to serve requests:

CockroachDB dynamically adjusts the data placement to account for shifts in node utilization. As a result, it may want to laterally move the replica from one node to another within, say, region X. To make it concrete, let’s say we want to move it from X2 to X1. We may want to do this because the operator has specified that X2 should go down for maintenance, or X2 has much higher CPU usage than X1.

As the diagram shows, the Raft group under consideration is initially located on X2,Y1,Z2. The active configuration of this group will be the majority configuration X2,Y1,Z2, meaning two out of these three are required to make a decision (such as electing a leader, or committing a log entry).

We want to laterally move from X2 to X1, that is, we’d like to end up with replicas on X1,Y1, and Z2in the corresponding configuration (X1,Y1,Z2). But how does that actually happen?

In Raft, a configuration change is initiated by proposing a special log entry which, when received by a replica, switches it over to the new configuration. Since the replicas forming the Range receive this command at different points in time, they do not switch over in a coordinated fashion and care must be taken to avoid the dreaded “split brain” scenario, in which two distinct groups of peers both think they have the right to make decisions. Here’s how this could happen in our particular example:

Y1is the first node to receive the configuration change in its log, and it immediately switches to C2=X1, Y1, Z2. It catches up X1, which consequently also switches to C2. X1 and Y1 form a quorum of C2, so they can now append log entries without consulting with either X2 or Z2. But - both X2 and Z2 are still using C1=(X2, Y1, Z2) and have no idea a new configuration is active elsewhere. If they happen to not learn about this in a timely manner from Y1- imagine a well-placed network disruption - they might decide to elect a leader between themselves and may start appending their own entries at conflicting log positions - split brain. Membership changes are tricky!

One way to look at the above is that we were trying to “change too much at once”: we effectively added a node (X1) and removed a node (X2) at the same time. Maybe things would turn out OK if we carried these changes out individually, waiting for a majority to have received the first change before starting the second?

It turns out that this is true. Let’s add X1 first before removing X2. This means that in the above illustration we’ll have C2=(X1, X2, Y1, Z2). Note that now that there are four nodes, there are three nodes required for majority consensus. This means that when X1and Y1have both switched to the new configuration, they can’t make their own decisions just yet - they need to loop in one additional peer (and tell it about C2), that is, either X2or Z2. Whichever one they pick effectively leaves a single replica using C1, but without a friend to form a separate quorum with. Similarly, we can convince ourselves that removing one node at a time is safe, too.

Breaking complex configuration changes such as lateral moves into “safer” individual parts is how CockroachDB worked for a long time. However, does it work with our deployment above? Let’s take another look at the intermediate state we’re in after adding X1, but before removing X2 (similar problems occur if we remove X2 first, then add X1):

Remember how we realized earlier that by placing two replicas in a single region, we could run into trouble? This is exactly what we were forced to do here. If region X fails, we’re in trouble: we lose two replicas at once, leaving two survivors unable to muster up the third replica required for a healthy majority (recall that a group of size four needs three replicas to make progress). As a result, this Range will stop accepting traffic until region X comes back up - violating the guarantees we were expecting from this deployment topology. We might try to argue that configuration changes are rare enough to make this a non-issue, but we’ve found that this does not hold. A CockroachDB cluster maintains many thousands of Ranges; at any given time, there might be a configuration change going on on some Range. But even without taking that into account, compromising availability in ways not transparent to the user is deeply unsatisfying to us.

Until recently, CockroachDB mitigated this problem by carrying out the two adjacent membership changes as fast as possible, to minimize the time spent in the vulnerable configuration. However, it was clear that we couldn’t accept this state of affairs permanently and set out to address the issue in the 19.2 release of CockroachDB.

The solution to our problem is outlined in the dissertation in which the Raft consensus algorithm was first introduced, and is named Joint Consensus. The idea is to pick a better intermediate configuration than we did in our example above - one that doesn’t force us to put two replicas into a single region.

What if our intermediate configuration instead “joined” the initial and final configuration together, requiring agreement of both? This is exactly what Joint Consensus does. Sticking to our example, we would go from our initial configuration C1=X2,Y1,Z2 to the “joint configuration” C1 && C2= (X2,Y,Z2) && (X1,Y1,Z2):

In this configuration, making a decision requires agreement of a majority of C1 as well as a majority of C2. Revisiting our earlier counter-example in which X2 and Z2 had not received the old configuration yet, we find that the split-brain is impossible: X1 and Y1(who are using the joint configuration) can’t make a decision without contacting either X2 or Z2, preventing split-brain. At the same time, the joint configuration survives a region outage just fine, since both C1and C2 do so individually!

Hence, the plan was clear: implement joint configuration changes, and use them. This provided a welcome opportunity to contribute back to the community, as we share a Raft implementation with the etcd project. etcd is a distributed key-value store commonly used for configuration management (notably, it backs Kubernetes), and we’ve been an active maintainer (and user) of its etcd/raft library well before Cockroach Labs even sprung into existence in 2015.

At this point, it’s time for a juicy confession:

etcd/raft doesn’t actually really implement the Raft consensus algorithm.

It does closely follow the specification for the most part, but with one marked difference: configuration changes. We’ve explained above that in Raft, a peer should switch to the new configuration the moment it is appended to its log. In etcd/raft, the peer switches to the new configuration the moment is committed and applied to the state machine.

The difference may seem small, but it carries weight. Briefly put,

the “Raft way” is proven correct in the paper, but more awkward use from the app, while

the “etcd/raft way” comes with subtle problems that require subtle fixes, but has a more natural external API.

We took the opportunity to discuss with the other maintainers whether etcd/raft should fall in line with the spec. In the process, we uncovered some previously unknown potential correctness problems. A little later, Peng Qu over from PingCap (they’re using a Rust implementation of Raft very similar to etcd/raft) alerted us to yet another problem.

After we found and implemented solutions for both problems, we arrived at a good understanding about the additional invariants that truly make etcd/raft’s approach safe. At this point, neither we nor the maintainer community felt that changing to the “Raft way” now provided a good return on what would have been a very large investment in etcd/raft and all of its implementers (!). In this particular case, it seemed better to be more complicated internally, remain easy to use externally (though with a wart or two), while keeping the battle-tested code we had in place mostly intact.

With this detour out of the way, we went ahead and implemented joint configuration changes. Now, a few months and 22 pull requests later, anyone using etcd/raft can enjoy the well-maintained fruits of our work. Additionally, we added datadriven testing machinery that significantly simplifies testing complex interactions within Raft peers (see here for a sample). This significantly simplifies testing and provides fertile grounds for future work or even just explorations.

Naturally we also started using this new functionality in CockroachDB’s recent 19.2 release. If you haven’t given us a try yet, it’s easy to do so either locally or in the cloud.