In a multi-region deployment, the follower reads pattern is a good choice for tables with the following requirements:

- Read latency must be low, but write latency can be higher.

- Reads can be historical (4.8 seconds or more in the past).

- Rows in the table, and all latency-sensitive queries, cannot be tied to specific geographies (e.g., a reference table).

- Table data must remain available during a region failure.

This pattern is compatible with all of the other multi-region patterns except Geo-Partitioned Replicas. However, if reads from a table must be exactly up-to-date, use the Duplicate Indexes or Geo-Partitioned Leaseholders pattern instead. Up-to-date reads are required by tables referenced by foreign keys, for example.

Prerequisites

Fundamentals

- Multi-region topology patterns are almost always table-specific.

- Review how data is replicated and distributed across a cluster, and how this affects performance. It is especially important to understand the concept of the "leaseholder". For a summary, see Reads and Writes in CockroachDB. For a deeper dive, see the CockroachDB Architecture documentation.

- Review the concept of locality, which makes CockroachDB aware of the location of nodes and able to intelligently place and balance data based on how you define replication controls.

- Review the recommendations and requirements in our Production Checklist.

- This topology doesn't account for hardware specifications, so be sure to follow our hardware recommendations and perform a POC to size hardware for your use case.

- Adopt relevant SQL Best Practices to ensure optimal performance.

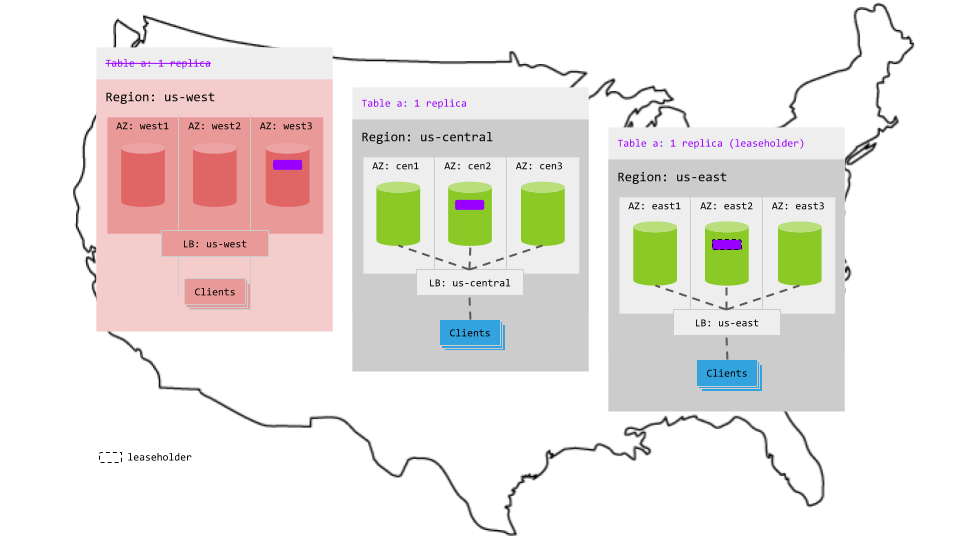

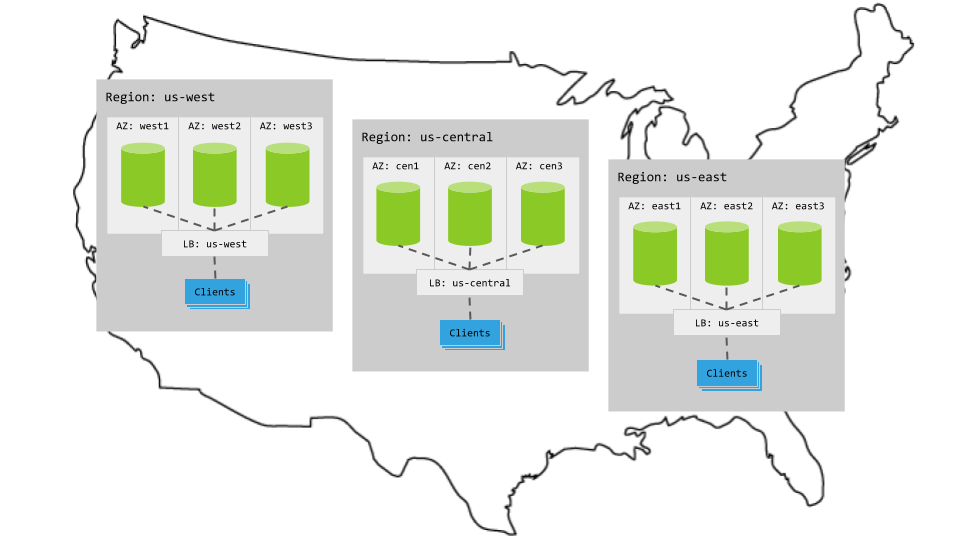

Cluster setup

Each multi-region topology pattern assumes the following setup:

Hardware

- 3 regions

- Per region, 3+ AZs with 3+ VMs evenly distributed across them

- Region-specific app instances and load balancers

    - Each load balancer redirects to CockroachDB nodes in its region.

    - When CockroachDB nodes are unavailable in a region, the load balancer redirects to nodes in other regions.
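The load balancer behavior described above can be sketched as a small routing function. This is an illustration only, not a configuration for any real load balancer: the `Node` type, its `healthy` flag, and the node addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    address: str   # hypothetical node address
    region: str    # region the node was started in (see --locality)
    healthy: bool  # outcome of a health check, assumed to exist


def candidate_nodes(lb_region: str, nodes: list[Node]) -> list[Node]:
    """Return the nodes a regional load balancer should route to."""
    healthy = [n for n in nodes if n.healthy]
    local = [n for n in healthy if n.region == lb_region]
    # Prefer in-region nodes; fall back to nodes in other regions only
    # when no node in the load balancer's own region is available.
    return local if local else healthy
```

With this rule, traffic stays in-region during normal operation and crosses regions only when every local node is down.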

Cluster

Each node is started with the --locality flag specifying its region and AZ combination. For example, the following command starts a node in the west1 AZ of the us-west region:

$ cockroach start \
    --locality=region=us-west,zone=west1 \
    --certs-dir=certs \
    --advertise-addr=<node1 internal address> \
    --join=<node1 internal address>:26257,<node2 internal address>:26257,<node3 internal address>:26257 \
    --cache=.25 \
    --max-sql-memory=.25 \
    --background
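When starting many nodes, it can help to generate this command per node so that only the locality and addresses vary. The following is a minimal sketch that reuses only the flags shown above; the function name and the example addresses are hypothetical, and the join port is assumed to be 26257 as in the command above.

```python
import shlex


def start_command(region: str, zone: str, advertise_addr: str,
                  join_addrs: list[str]) -> str:
    """Build the `cockroach start` command line for one node."""
    args = [
        "cockroach", "start",
        # Locality tells the cluster which region and AZ this node is in.
        f"--locality=region={region},zone={zone}",
        "--certs-dir=certs",
        f"--advertise-addr={advertise_addr}",
        "--join=" + ",".join(f"{addr}:26257" for addr in join_addrs),
        "--cache=.25",
        "--max-sql-memory=.25",
        "--background",
    ]
    # Quote each argument so the result is safe to paste into a shell.
    return " ".join(shlex.quote(a) for a in args)
```

For example, `start_command("us-west", "west1", "10.0.0.1", ["10.0.0.1", "10.0.0.2", "10.0.0.3"])` produces a command whose `--locality` value is `region=us-west,zone=west1`.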

Configuration

The follower reads feature requires an Enterprise license.

Summary

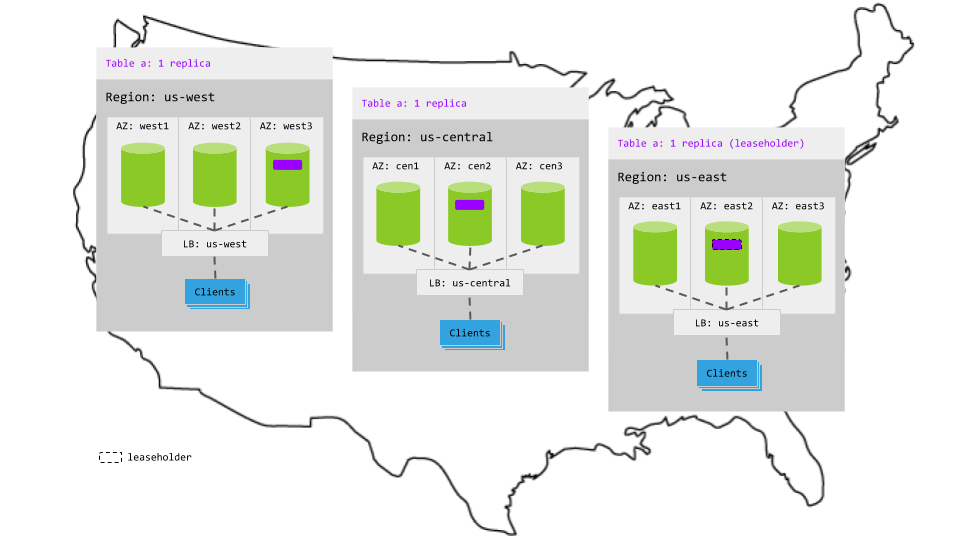

Using this pattern, you configure your application to use the follower reads feature by adding an AS OF SYSTEM TIME clause when reading from the table. This tells CockroachDB to read slightly historical data from the closest replica so as to avoid being routed to the leaseholder, which may be in an entirely different region. Writes, however, will still leave the region to get consensus for the table.

Steps

Assuming you have a cluster deployed across three regions and a table like the following:

> CREATE TABLE postal_codes (
    id INT PRIMARY KEY,
    code STRING
);

Insert some data:

> INSERT INTO postal_codes (id, code) VALUES (1, '10001'), (2, '10002'), (3, '10003'), (4, '60601'), (5, '60602'), (6, '60603'), (7, '90001'), (8, '90002'), (9, '90003');

If you do not already have one, request a trial Enterprise license.

Configure your app to use AS OF SYSTEM TIME experimental_follower_read_timestamp() whenever reading from the table:

Note: The experimental_follower_read_timestamp() function will set the AS OF SYSTEM TIME value to the minimum required for follower reads.

> SELECT code FROM postal_codes AS OF SYSTEM TIME experimental_follower_read_timestamp() WHERE id = 5;

Alternately, instead of modifying individual read queries on the table, you can set the AS OF SYSTEM TIME value for all operations in a read-only transaction:

> BEGIN;
  SET TRANSACTION AS OF SYSTEM TIME experimental_follower_read_timestamp();
  SELECT code FROM postal_codes WHERE id = 5;
  SELECT code FROM postal_codes WHERE id = 6;
  COMMIT;

Using the SET TRANSACTION statement as shown in the example above will make it easier to use the follower reads feature from drivers and ORMs.
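From a driver, the SET TRANSACTION approach might look like the following minimal sketch. It assumes a DB-API 2.0 style cursor on a connection in autocommit mode, so that the explicit BEGIN and COMMIT are the only transaction boundaries, and it assumes `%s`-style parameter placeholders. The helper name `run_follower_reads` is hypothetical.

```python
FOLLOWER_TS = "experimental_follower_read_timestamp()"


def run_follower_reads(cursor, queries):
    """Run read-only queries in one transaction pinned to a follower-read timestamp.

    `cursor` is any DB-API 2.0 style cursor (assumed to be in autocommit mode).
    `queries` is a list of (sql, params) pairs.
    Returns one list of rows per query.
    """
    results = []
    cursor.execute("BEGIN")
    try:
        # Every statement in this transaction now reads at the same
        # slightly historical timestamp, so the nearest replica can serve it.
        cursor.execute(f"SET TRANSACTION AS OF SYSTEM TIME {FOLLOWER_TS}")
        for sql, params in queries:
            cursor.execute(sql, params)
            results.append(cursor.fetchall())
        cursor.execute("COMMIT")
    except Exception:
        cursor.execute("ROLLBACK")
        raise
    return results
```

Because the timestamp is set once per transaction, the individual SELECT statements do not need their own AS OF SYSTEM TIME clause, which keeps ORM-generated queries unchanged.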

Characteristics

Latency

Reads

Reads retrieve historical data from the closest replica and, therefore, never leave the region. This keeps read latency very low, but the data returned is slightly stale.

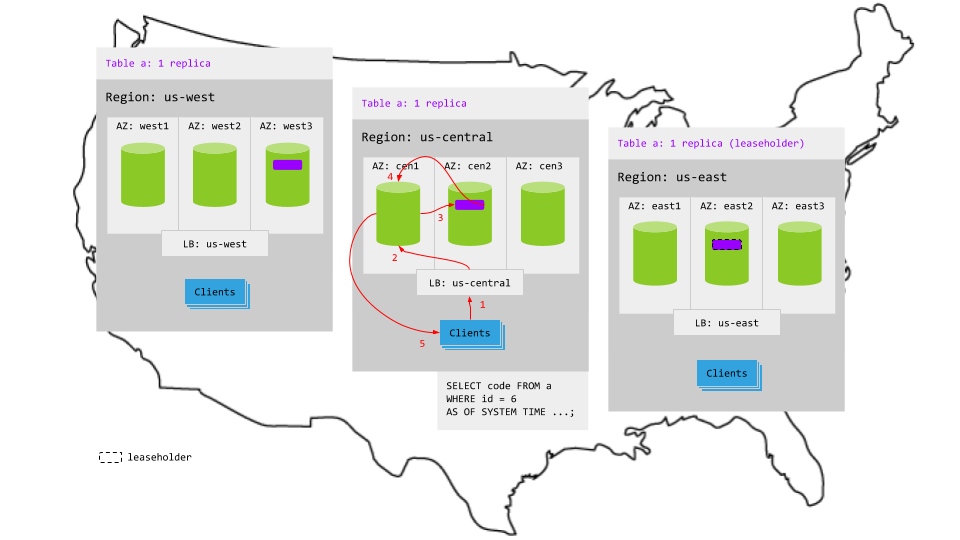

For example, in the animation below:

- The read request in us-central reaches the regional load balancer.

- The load balancer routes the request to a gateway node.

- The gateway node routes the request to the closest replica for the table. In this case, the replica is not the leaseholder.

- The replica retrieves the results as of 4.8 seconds in the past and returns them to the gateway node.

- The gateway node returns the results to the client.
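To make the staleness in the read path concrete, the following toy model shows what a read "as of 4.8 seconds in the past" returns when a row has several committed versions. It is a simplified sketch, not how CockroachDB stores data: the version list, timestamps, and function names are hypothetical, and the 4.8-second figure is the one quoted in this document.

```python
FOLLOWER_READ_STALENESS_S = 4.8  # staleness quoted in this document


def read_as_of(versions, read_ts):
    """Return the newest value committed at or before read_ts, or None.

    `versions` is a list of (commit_time_seconds, value) pairs.
    """
    visible = [v for v in versions if v[0] <= read_ts]
    if not visible:
        return None
    return max(visible, key=lambda v: v[0])[1]


def follower_read(versions, now):
    """Read the value as it was FOLLOWER_READ_STALENESS_S seconds before `now`."""
    return read_as_of(versions, now - FOLLOWER_READ_STALENESS_S)
```

In this model, a write committed 2 seconds ago is not yet visible to a follower read, while the same write becomes visible once it is at least 4.8 seconds old.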

Writes

The replicas for the table are spread across all 3 regions, so writes involve multiple network hops across regions to achieve consensus. This increases write latency significantly.

For example, in the animation below:

- The write request in us-central reaches the regional load balancer.

- The load balancer routes the request to a gateway node.

- The gateway node routes the request to the leaseholder replica for the table in us-east.

- Once the leaseholder has appended the write to its Raft log, it notifies its follower replicas.

- As soon as one follower has appended the write to its Raft log (and thus a majority of replicas agree based on identical Raft logs), it notifies the leaseholder and the write is committed on the agreeing replicas.

- The leaseholder then returns acknowledgement of the commit to the gateway node.

- The gateway node returns the acknowledgement to the client.
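The steps above imply a rough latency estimate: the round trip from the gateway to the leaseholder, plus the wait for enough followers to acknowledge the write to form a majority. The sketch below models only network round trips and ignores disk and processing time; the function names and any round-trip values passed in are hypothetical.

```python
def majority(replicas: int) -> int:
    """Smallest number of replicas that forms a quorum."""
    return replicas // 2 + 1


def write_latency_ms(gateway_to_leaseholder_rtt: float,
                     follower_rtts: list[float]) -> float:
    """Estimate client-visible write latency for one range.

    `follower_rtts` are round-trip times from the leaseholder to each
    follower. The leaseholder itself counts toward the quorum, so it only
    waits for the fastest (majority - 1) followers.
    """
    replicas = len(follower_rtts) + 1
    acks_needed = majority(replicas) - 1
    wait_for_quorum = sorted(follower_rtts)[acks_needed - 1] if acks_needed else 0.0
    return gateway_to_leaseholder_rtt + wait_for_quorum
```

With 3 replicas, `majority(3)` is 2, so the leaseholder waits for only the faster of its two followers. For instance, `write_latency_ms(30.0, [60.0, 35.0])` evaluates to `65.0`.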

Resiliency

Because this pattern balances the replicas for the table across regions, one entire region can fail without interrupting access to the table: