AI agents, always-on workloads, and the architectural limits of infrastructure designed for human traffic

Disclaimer: Data in this blog comes from the State of AI Scale & Resilience Survey, conducted by Cockroach Labs and Wakefield Research among 1,125 senior cloud architects and engineering and technology executives (minimum seniority: Director) in 3 regions across 11 markets: North America (U.S., Canada), EMEA (Germany, Italy, France, UK, Israel), and APAC (India, Australia, Singapore, Japan). The survey was fielded between December 5 and December 16, 2025, using an email invitation and an online survey. Note: results of any sample are subject to sampling variation.

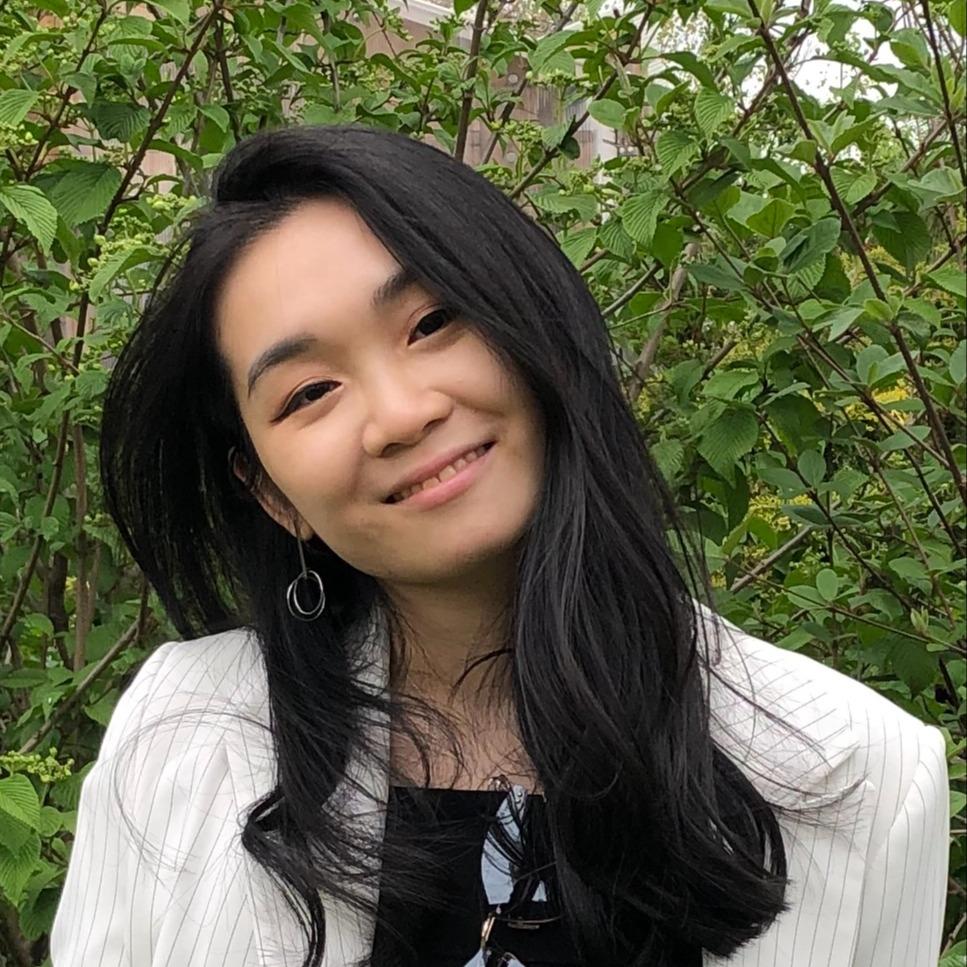

83% of technology leaders believe their infrastructure will fail under AI pressure within two years.

That number is not meant to provoke alarm. It reflects the forward-looking judgment of 1,125 global engineering and technology leaders who are already seeing stress in parts of their systems that were never designed to carry it.

AI does not simply add more requests to existing platforms. It changes who generates load, how frequently that load appears, and how predictable it is. Instead of people clicking buttons during business hours, a growing share of traffic is poised to come from machines: agents, copilots, and background processes that run 24/7 across regions, without pauses or quiet periods.

AI is not a temporary spike. If leaders expect infrastructure failure this soon, the problem is not experimentation or immaturity. It is the architecture underneath these workloads.

AI adoption is scaling faster than any technology before it

This moment looks nothing like previous platform shifts.

Consumer adoption of AI is moving faster than the internet did, faster than smartphones did, and faster than social platforms ever managed. AI tools are no longer niche enhancements. They are becoming default interfaces for work, support, creation, and decision making.

What matters more than adoption speed is how AI is used. AI systems do not just operate in bursts; they run continuously, serving global demand by default, and their activity is machine-driven rather than paced by human behavior.

Earlier technology waves scaled the number of users. AI will scale the number of system interactions. 100% of survey respondents expect AI workloads to grow in the next year, and that growth compounds. One request often fans out into thousands of downstream operations that must coordinate in real time. In financial services, that means real-time fraud scoring and risk evaluation layered on top of core transactional systems. In retail, it means personalization and inventory coordination running continuously across global storefronts.

The hidden cost of AI scale is coordination

Most discussions about AI infrastructure focus on models and training pipelines. That is where budgets are visible. The real strain shows up somewhere else.

AI workloads introduce continuous pressure. Systems that once had natural idle periods now run without pause. Concurrency rises sharply as agents and real-time inference operate in parallel. Read and write patterns become harder to predict. Services are constantly coordinating with one another in the background.

All of that pressure lands on the data layer. Databases are expected to remain consistent, available, correct, and fast under sustained transactional load, even as demand shifts unpredictably. There are no quiet windows to recover or rebalance.

AI does not break systems by causing dramatic spikes. It breaks them by never stopping.

The economics of AI infrastructure are changing. For the past decade, total cost of ownership was dominated by compute, storage, and network spend. Those costs are visible and easy to model. AI changes that equation.

Once systems run continuously under machine-driven demand, the real cost driver becomes coordination. Retries. Contention. Partial failures. Recovery overhead. Human intervention. These costs do not show up as line items on a cloud invoice. They show up as degraded performance, cascading incidents, and lost customer trust.
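Retries are a good example of a coordination cost that never appears on an invoice. When several layers of a call chain each retry a failing call on their own, the attempts multiply rather than add. The Python sketch below illustrates this under deliberately simple assumptions: every layer retries the same fixed number of times, the dependency fails every time, and there is no backoff or retry budget. All function names are hypothetical and not part of any CockroachDB or library API.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def worst_case_attempts(retries_per_layer: int, layers: int) -> int:
    """Closed form: each retrying layer multiplies attempts by (retries + 1)."""
    if retries_per_layer < 0 or layers < 0:
        raise ValueError("retries_per_layer and layers must be non-negative")
    return (retries_per_layer + 1) ** layers


def call_with_retries(fn: Callable[[], T], retries: int) -> T:
    """Call fn, retrying up to `retries` extra times on RuntimeError,
    which stands in here for any transient error (timeout, contention)."""
    if retries < 0:
        raise ValueError("retries must be non-negative")
    for attempt in range(retries + 1):
        try:
            return fn()
        except RuntimeError:
            if attempt == retries:
                raise  # out of retries: propagate to the caller
    raise AssertionError("unreachable")


def with_retries(inner: Callable[[], T], retries: int) -> Callable[[], T]:
    """Wrap a callable so that this hypothetical 'layer' retries it independently."""
    return lambda: call_with_retries(inner, retries)


def simulate_bottom_attempts(retries_per_layer: int, layers: int) -> int:
    """Count how many times a permanently failing dependency is called
    when `layers` stacked services each retry it on their own."""
    calls = 0

    def failing_dependency() -> None:
        nonlocal calls
        calls += 1
        raise RuntimeError("dependency unavailable")

    fn: Callable[[], None] = failing_dependency
    for _ in range(layers):
        fn = with_retries(fn, retries_per_layer)
    try:
        fn()
    except RuntimeError:
        pass  # the original request fails; only the call count matters here
    return calls


if __name__ == "__main__":
    for layers in (1, 2, 3, 4):
        print(layers, simulate_bottom_attempts(3, layers), worst_case_attempts(3, layers))
```

Under these assumptions, three retries at each of three layers turn one failed request into 64 calls against the struggling dependency, exactly when it can least absorb them. Retrying at a single layer, exponential backoff with jitter, and retry budgets are common ways to contain this.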

As AI becomes embedded in revenue-generating systems, the cost of keeping systems consistent and available begins to outweigh raw hardware spend.

This is the shift many leaders are starting to see.

Infrastructure failure is an architectural problem, not an AI experiment gone wrong

The timelines leaders are projecting are uncomfortably short. More than a third (34%) expect infrastructure failure in less than a year, and nearly a third (30%) believe the database will be the first layer to fail.

This is not about teams moving too quickly with AI. It is about long-standing assumptions colliding with machine-scale reality. Many systems were built on the idea that traffic comes in waves, that humans are the primary drivers, that downtime can be scheduled, and that failure is an exception.

Those assumptions no longer hold. Adding replicas, increasing instance sizes, or layering on tooling may buy time, but it does not change the underlying failure modes. You cannot patch your way out of an architecture that was designed for a different era.

AI scale demands redesign.

Why the database layer has become the breaking point

Elastic infrastructure helps, but only to a point. As systems scale, coordination becomes the limiting factor.

AI workloads increase transactional pressure, not just storage requirements. Latency matters more than ever, and partial failures are often more damaging than full outages because they ripple through automated workflows. Small delays can cascade quickly when machines are driving demand.
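Why small delays cascade can be made concrete with a back-of-the-envelope calculation. Assume, purely for illustration, that a request waits on N independent downstream operations and that each one misses its latency budget with probability p. The request is then slow whenever any of them is, which happens with probability 1 - (1 - p)^N. The function name and the 1% figure are illustrative assumptions, not survey data.

```python
def prob_any_slow(p_slow: float, fan_out: int) -> float:
    """Probability that at least one of `fan_out` downstream operations
    exceeds its latency budget, when each does so with probability p_slow.
    Assumes the operations are independent and the request waits for all of them."""
    if not 0.0 <= p_slow <= 1.0:
        raise ValueError("p_slow must be in [0, 1]")
    if fan_out < 0:
        raise ValueError("fan_out must be non-negative")
    return 1.0 - (1.0 - p_slow) ** fan_out


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(f"fan-out {n:>4}: {prob_any_slow(0.01, n):.1%} of requests hit a slow operation")
```

With p = 1% and a fan-out of 100, roughly 63% of requests would wait on at least one slow operation, even though each individual operation looks healthy on its own.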

Databases designed for single-region deployments or predictable growth struggle under sustained, distributed load. Replication lag, contention, and complex failover behavior surface faster when coordination is constant.

Once AI moves into production, the database is no longer just working in the background. It becomes the constraint that defines the limits of the entire system. When the database hesitates, the entire system hesitates. When it degrades, the degradation multiplies across automated workflows that do not pause to recover.

What AI-ready infrastructure actually requires

Being ready for AI is not about checking off features. It is about adopting an architectural posture that assumes continuous, unpredictable demand.

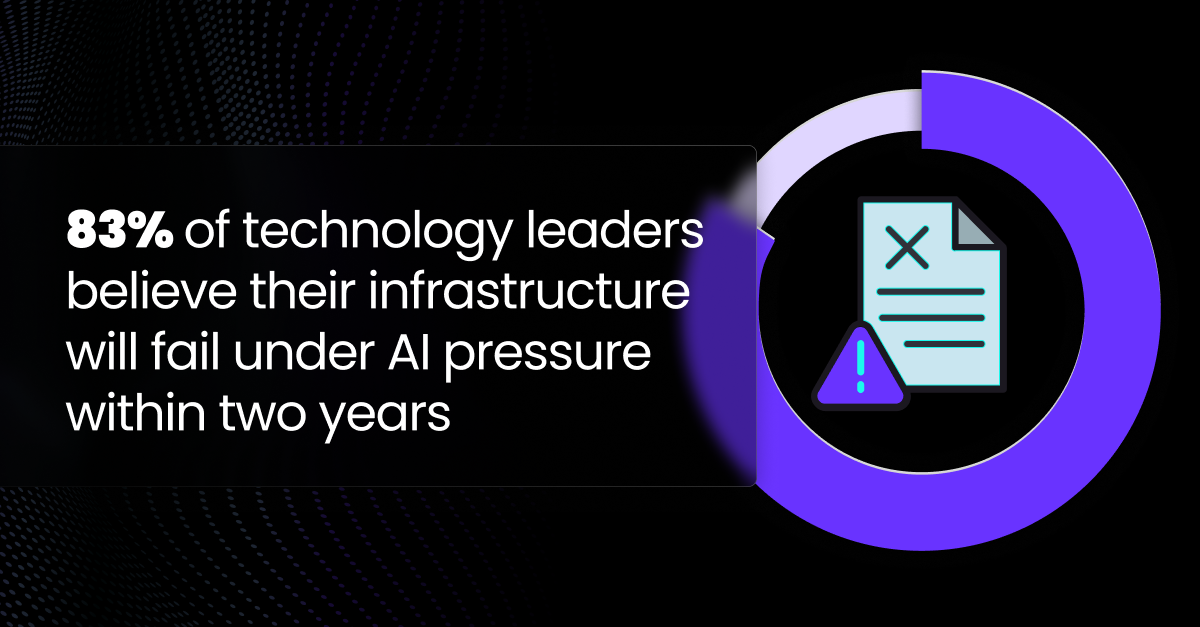

AI-ready systems are distributed by default rather than retrofitted later. They support multi-active operation across regions so there is no single point of write traffic or failure. They preserve strong consistency even under high concurrency, and they handle failure automatically without requiring human intervention. They are not architecturally tied to a single region or provider. Diversity in infrastructure is not redundancy for its own sake. It is survivability in an environment where regulatory, geopolitical, and economic conditions can shift quickly.
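One common way systems combine strong consistency with automatic failover is majority-quorum replication through a consensus protocol such as Raft, which CockroachDB uses. The arithmetic below is a simplified sketch: it assumes a write commits once a majority of replicas acknowledge it, and it ignores leadership, latency, and detailed placement rules. The helper names and region labels are hypothetical.

```python
def majority_quorum(replicas: int) -> int:
    """Smallest number of replicas that forms a strict majority."""
    if replicas < 1:
        raise ValueError("need at least one replica")
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """Replicas that can be lost while a majority remains available."""
    return replicas - majority_quorum(replicas)


def survives_region_loss(replicas_per_region: dict[str, int]) -> bool:
    """True if losing any one region still leaves a majority of replicas,
    so in a majority-quorum system writes can continue without manual failover."""
    total = sum(replicas_per_region.values())
    quorum = majority_quorum(total)
    return all(total - lost >= quorum for lost in replicas_per_region.values())


if __name__ == "__main__":
    # Region labels are hypothetical placeholders.
    single_region = {"region-a": 3}
    three_regions = {"region-a": 1, "region-b": 1, "region-c": 1}
    print(tolerated_failures(3), tolerated_failures(5))
    print(survives_region_loss(single_region), survives_region_loss(three_regions))
```

Placement matters as much as count: three replicas in one region lose quorum along with that region, while the same three replicas spread across three regions survive the loss of any one. Four replicas tolerate no more failures than three, which is why odd replica counts are typical.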

Just as importantly, they operate without scheduled downtime. Maintenance windows and planned outages are incompatible with systems that never sleep.

These requirements are no longer theoretical. They are becoming the baseline for any platform expected to support production AI.

Why distributed SQL fits the AI era

AI-native systems need a system of record that can grow with success rather than constrain it.

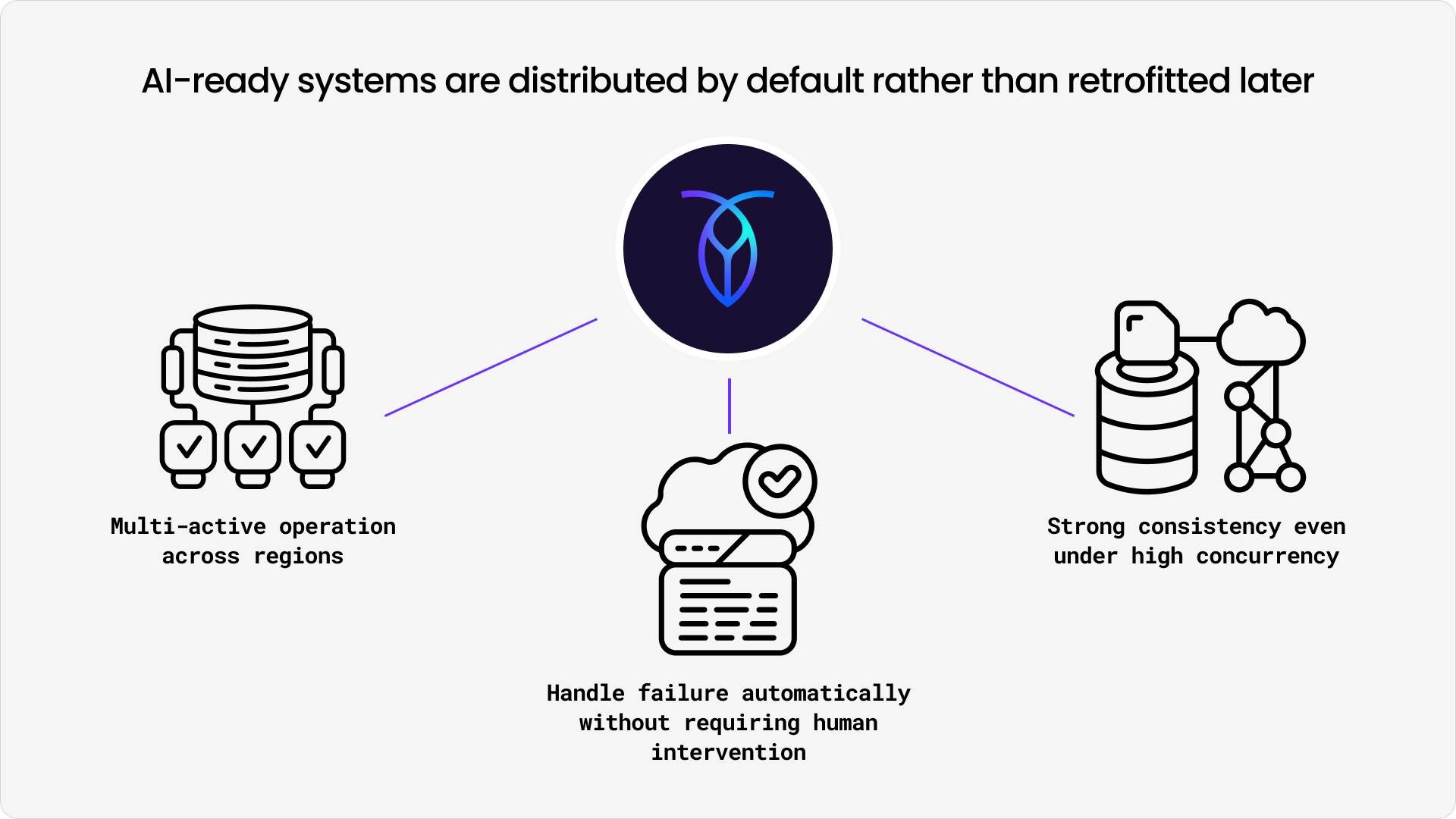

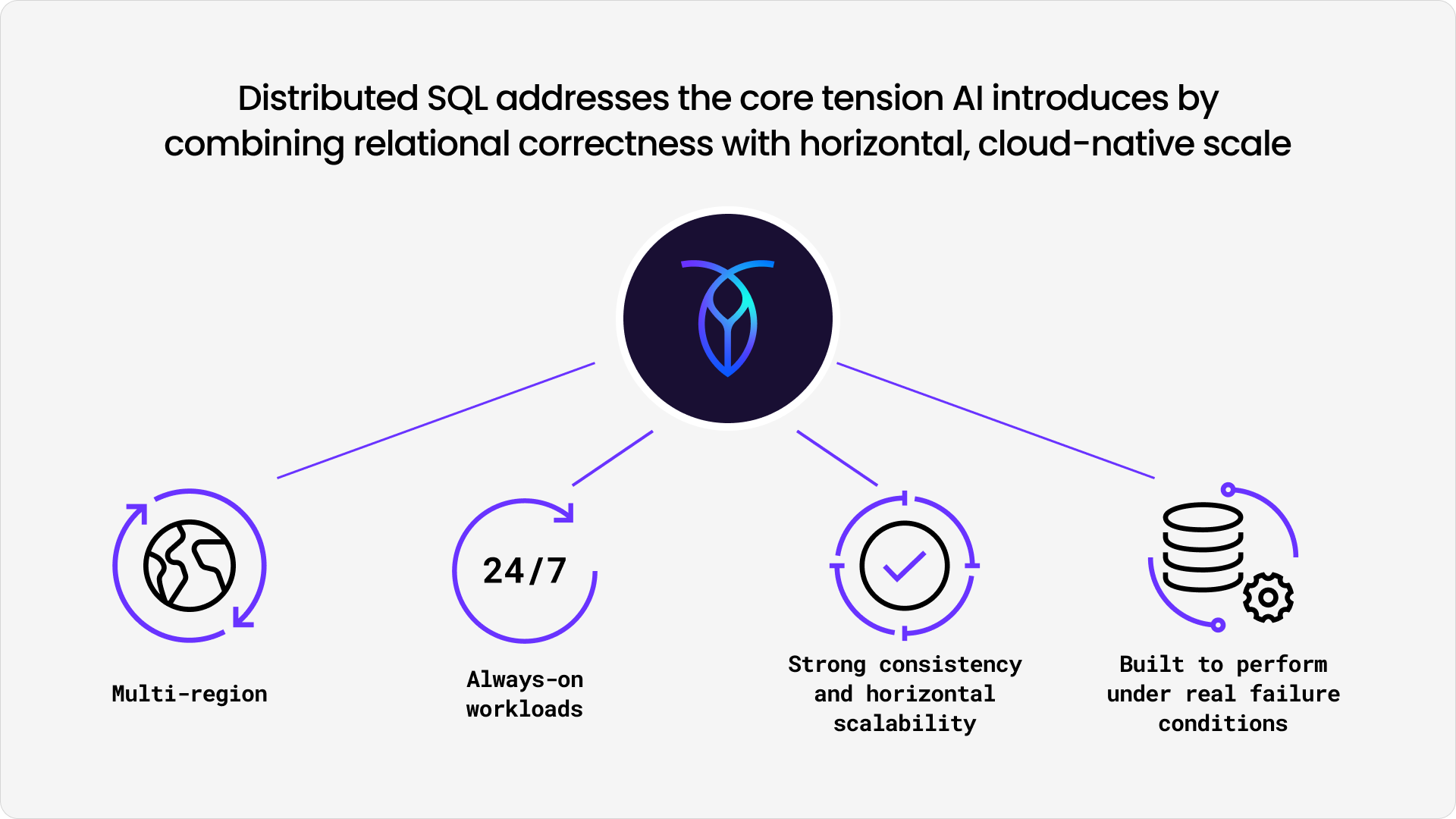

Distributed SQL addresses the core tension AI introduces by combining relational correctness with horizontal, cloud-native scale. It treats coordination, consistency, and availability as foundational concerns instead of tradeoffs that teams must manage themselves.

CockroachDB fits into this category by design. It supports multi-region, always-on workloads with strong consistency and horizontal scalability, and it is built to perform under real failure conditions rather than idealized benchmarks.

The goal is not to add complexity. It is to make the database disappear into the background so teams can focus on building and operating AI systems with confidence.

AI will scale whether your infrastructure is ready or not

By 2027, the difference will not be who adopted AI first. It will be whose systems hold up.

AI scale is inevitable, and the window for architectural decisions is closing quickly. The choices organizations make now will determine whether AI becomes a durable advantage or an ongoing source of risk.