Every season, Black Friday and Cyber Monday are like the Superbowl for retailers and 2024 will be no different. Instead of point spreads, e-commerce companies are betting on peak sales numbers with many retailers reporting that 30% of their yearly sales will come from this time of year.

But while the deluge of deals evokes dreams of dollars for CEOs, the surging site traffic can be more of a nightmare for CTOs. The onslaught of shoppers puts huge amounts of pressure on their application architecture. In these scenarios, even a minor problem with inventory management can cause major consequences. (DoorDash published a useful blog about how they navigate the challenge of real-time inventory level changes with changefeeds.)

How Black Friday stresses inventory management systems

On every day but particularly on Black Friday, backend inventory systems have to deliver on four fundamental requirements:

1. Ability to scale, flexibly and responsively

Inventory management systems must be able to meet the increased flood of both reads and writes that will come in over the Black Friday period, as shoppers view items, add them to carts, and purchase them.

While companies can attempt to estimate the demand beforehand and provision hardware resources accordingly, all the capacity planning in the universe can’t expect the unexpected. One of your products could get unexpectedly hot, or a competitor’s outage could drive extra customers to your site. The inventory management system needs to be able to function in real-time regardless of load, which generally means that it needs to be architected in such a way that additional resources can be provisioned quickly and easily.

In practice, this generally means embracing a distributed, container-based cloud architecture so that new services can be quickly spun up whenever the load on the system increases. And, just as importantly, spun back down when the spike passes.

2. High availability

If any part of the inventory management system goes offline during Black Friday, the financial consequences could be massive.

The most obvious problem is all of the lost sales that occur when customers hit your product page only to find a 404. Worse, however, is when some inventory management services go down while others are still working — allowing customers to purchase products that aren’t actually in stock. Beyond losing that sale, you also risk losing that customer forever.

The goal of retailers, on Black Friday as on all days, is to sell to zero but not beyond zero. If the wrong part of the system goes down (or simply gets bogged down) at the wrong time, selling beyond zero can become a possibility. Placating angry customers who’ve paid for products you don’t have can ultimately cost even more than the lost revenue.

3. Lowest possible latency

Inventory management services on the backend need to be able to answer queries quickly. Research shows that online shoppers typically give an application six seconds to respond before hopping off to another site.

For enterprise retailers, this typically requires a multi-region architecture so that key data and service instances can be located close to the customers accessing them. Your UK-based customers, for example, will have a much snappier shopping experience if their page views and purchases are querying inventory management services located in the UK, rather than make the round trip to a server in California.

4. Consistency

Every aspect of the application, from source-of-record database to user-facing application services, has to agree on how much inventory exists for each product, and where that inventory is located. Any kind of inconsistency can lead to the dreaded scenario of selling beyond zero, or a variety of other problems that create poor customer experience, such as products still being listed as in stock on the product page when the database knows they’re actually sold out.

Achieving consistency in a small-scale system is easy: you spin up a Postgres database somewhere and have all of your services query that. But at scale, consistency becomes quite complicated. The previous three requirements in this list suggest that inventory management systems should be cloud-native, distributed multi-region systems. How can you keep a single-source-of-truth database when separate regions of your application are processing huge numbers of transactions all at the same time?

Black Friday-ready inventory management architecture

While there’s no way of making Black Friday easy. However architecting your inventory management system in the right way can make achieving scale, high availability, low latency, and consistency much more attainable.

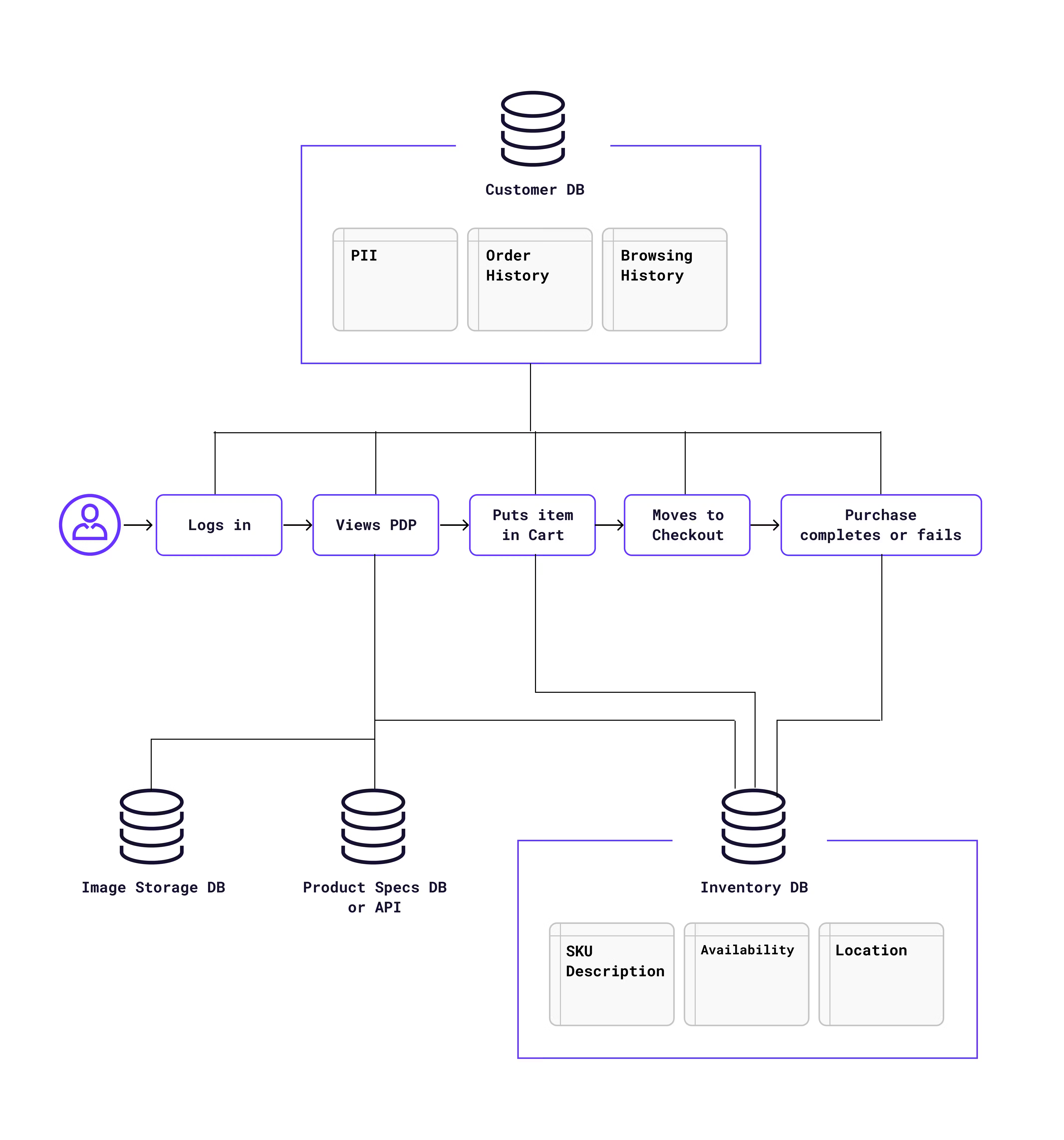

Let’s look at the backend databases that are typically called by application services as a user goes through the steps of making a purchase:

As we can see, a single purchase could potentially hit the inventory database at least three times:

A read when the user loads the product page, to see if the item they’re looking at is in stock, and potentially also where it’s located.

(Often) a write when the user adds the item to their cart to mark that the item isn’t currently available, although it is still in stock.

A write when the item is purchased or abandoned to permanently update the database on its status.

Needless to say, having each user in a Black Friday traffic tsunami querying your inventory database three times per transaction is a recipe for disaster.

So if we’re building a containerized, distributed, multi-region inventory management system, we now have two significant hurdles to overcome:

How can we keep a source-of-truth distributed, multi-region database consistent?

How can we keep application services up-to-date with correct information from that database without constantly querying it or risking serving users outdated information from a cache?

Not so long ago, there wasn’t really a good answer to these questions, because relational databases – which can enforce consistency with ACID transactional guarantees – weren’t cloud-native, distributed, or easy to scale.

Companies were forced to either grapple with the technical complexity of trying to scale legacy SQL databases manually via sharding (which is time-consuming and operationally complex) or embrace the compromises inherent in NoSQL databases (which are easier to scale but can offer only eventual consistency). Neither of these options is ideal, and both have the potential to lead to Black Friday disasters such as…

…not being able to keep up with unexpected demand because sharding a legacy SQL database isn’t quick or easy.

…selling past zero because the eventual consistency of NoSQL isn’t fast enough to keep up with the pace of customer purchases.

Today, thankfully, there are better options. Modern, distributed SQL databases such as CockroachDB offer the ironclad transactional consistency of legacy SQL databases together with the cloud-native, multi-region scalability of NoSQL databases. And since every CockroachDB database can be treated as a single logical database by the application, there’s less operational complexity: your application can treat CockroachDB exactly like it would treat a single Postgres instance, and CockroachDB will handle the complexity of maintaining consistency across a multi-region distributed setup all by itself.

So, choosing the right tooling has solved our first question: how can we keep a source-of-truth distributed, multi-region database consistent? But the second one remains unanswered: how can we keep application services up-to-date with correct information, while not overloading the database?

The most common solution to that problem is changefeeds, and here too, it turns out that choosing the right tool for the job can make things easier.

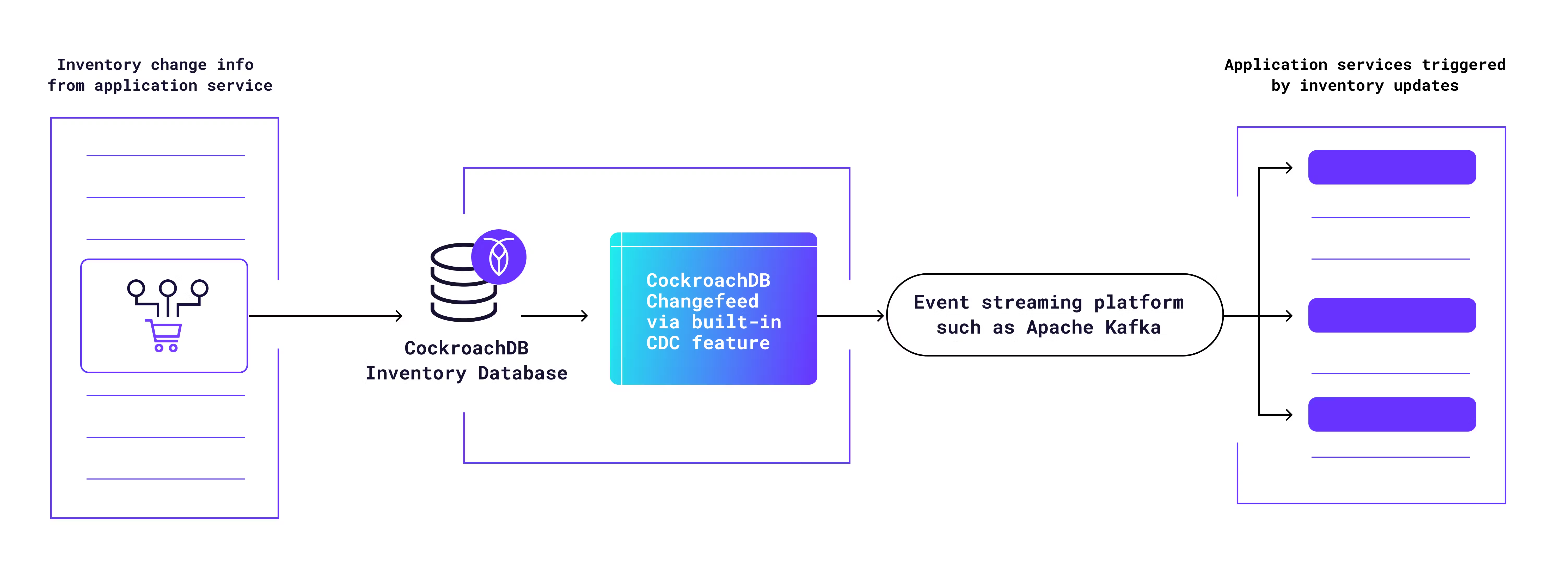

It’s possible to build a changefeed into your SQL database manually using the transactional outbox pattern, but modern databases often come with this sort of service built-in. CockroachDB’s built-in Change Data Capture feature, for example, allows you to easily send a changefeed to an event streaming platform such as Kafka.

For example, the diagram below is based on the real architecture of a major online retailer, and illustrates how they keep application services up-to-date with inventory status without constantly querying the inventory database itself. The CDC feed from their CockroachDB database is fed to Kafka, which then serves the variety of application services that are triggered by inventory changes.

For example, imagine it’s Black Friday and 100 TVs are available in your online shop. A customer buys one, and the inventory database is updated to 99. This update is sent via a changefeed to Kafka, and an application service is then triggered to update the product page cache so that the next time a user loads the product page, it reads 99 instead of 100 without having to query the inventory DB.

Unfortunately, there’s no way to make Black Friday easy on your application. Even with the best backend tools and impeccable architecture, the loads Black Friday can bring are always likely to be a source of stress. But building an inventory system that can deliver scale, availability, low latency, and consistency can certainly make things easier.

Learn more about how CockroachDB is powering retail and ecommerce applications at massive scale here, or feel free to get in touch with our team here to see how we can help.