\* *This blog from 2018 does not represent the most recent strategies for pinning data to locations at the row level in CockroachDB. And certain capabilities referenced, like interleaving, are no longer supported. This documentation about our multi-region capabilities is the best place to begin learning about the current best practices for scaling your database across multiple regions: https://www.cockroachlabs.com/docs/stable/multiregion-overview*

As we’ve written about previously, geographically distributed databases like CockroachDB offer a number of benefits including reliability, security, and cost-effective deployments. You shouldn’t have to sacrifice these upsides to realize impressive throughput and low latencies.

```
$ echo "constraints: {'+continent=asia': 2}" | ./cockroach zone set --insecure roachmart.users.asia -f -
```

CockroachDB configures each node at startup with hierarchical information about its locality. For Roachmart, the gateway node’s continent is Asia, its region is asia-east1, and its zone is asia-east1-a. (Public clouds don't expose rack information, but a private datacenter would likely include it in the locality flag.) The leaseholder replica is located in the region asia-northeast1 and the zone asia-northeast1-a. These localities are used as targets of replication zone configurations, and the system also uses them to keep replica diversity as high as possible.

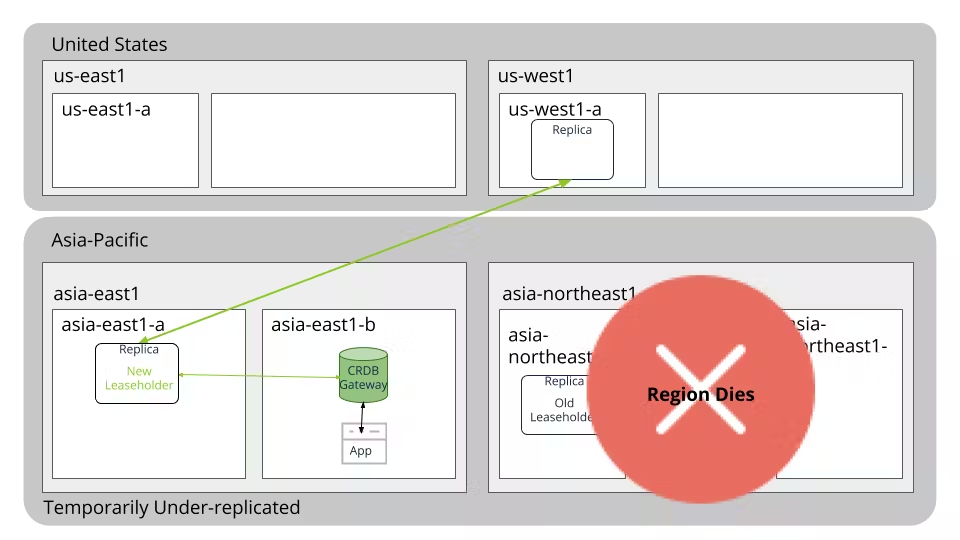

Roachmart requires that two replicas remain in Asia to ensure low-latency reads and writes. CockroachDB will automatically attempt to keep the third (and final) replica in a different region (in this case us-west) to ensure maximum diversity. This replica (located in us-west1-a) will only return to Asia in the event of a region-specific failure, such as unavailable or overloaded nodes.
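To make the constraint concrete, here is a minimal Python sketch of what `'+continent=asia': 2` asks for. It is a toy model and not CockroachDB's allocator: the `satisfies` helper, the dictionary layout, and the locality values not named in the text (such as `north-america` and `us-west1`) are assumptions made for illustration.

```python
from typing import Dict, List

Locality = Dict[str, str]


def satisfies(constraints: Dict[str, int], replicas: List[Locality]) -> bool:
    """Return True if every '+key=value': n rule is met by at least n replicas."""
    for rule, required in constraints.items():
        # Only required ('+') constraints are modeled in this sketch.
        key, value = rule.lstrip("+").split("=", 1)
        matching = sum(1 for r in replicas if r.get(key) == value)
        if matching < required:
            return False
    return True


# Hypothetical placement matching the Roachmart example.
placement = [
    {"continent": "asia", "region": "asia-northeast1", "zone": "asia-northeast1-a"},
    {"continent": "asia", "region": "asia-east1", "zone": "asia-east1-a"},
    {"continent": "north-america", "region": "us-west1", "zone": "us-west1-a"},
]
roachmart_asia = {"+continent=asia": 2}
print(satisfies(roachmart_asia, placement))
```

Dropping either Asian replica from `placement` makes the check fail, which is the situation in which the third replica would be pulled back to Asia.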

CockroachDB will place the two replicas within Asia in regions that are as geographically diverse as possible to increase survivability. For example, if there are nodes in two regions, CockroachDB will keep one of these two replicas in each. If there is only one region (either by design or because of temporary region unavailability), CockroachDB will automatically spread the replicas across that region's zones to maximize survivability.
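The idea of preferring the most diverse location can be sketched with a simple scoring function. This is an illustrative model under stated assumptions, not the scoring CockroachDB actually uses: the tier names, the `diversity` formula, and the `pick_most_diverse` helper are all hypothetical.

```python
from typing import Dict, List

Locality = Dict[str, str]
TIERS = ["continent", "region", "zone"]  # most to least significant


def parse_locality(flag: str) -> Locality:
    """Parse a 'key=value,key=value' locality string into a dict."""
    return dict(pair.split("=", 1) for pair in flag.split(","))


def diversity(a: Locality, b: Locality) -> float:
    """Score 1.0 if the localities differ at the top tier, less for lower
    tiers, and 0.0 if they are identical (assumed scoring, for illustration)."""
    for i, tier in enumerate(TIERS):
        if a.get(tier) != b.get(tier):
            return (len(TIERS) - i) / len(TIERS)
    return 0.0


def pick_most_diverse(existing: List[Locality], candidates: List[Locality]) -> Locality:
    """Choose the candidate with the highest total diversity against existing replicas."""
    return max(candidates, key=lambda c: sum(diversity(c, e) for e in existing))


# One replica already lives in asia-northeast1-a; where should the second Asian replica go?
existing = [parse_locality("continent=asia,region=asia-northeast1,zone=asia-northeast1-a")]
candidates = [
    parse_locality("continent=asia,region=asia-northeast1,zone=asia-northeast1-b"),
    parse_locality("continent=asia,region=asia-east1,zone=asia-east1-a"),
]
choice = pick_most_diverse(existing, candidates)
print(choice["region"], choice["zone"])
```

With two Asian regions available, the sketch prefers the other region. If every candidate were in the same region, the same function would fall back to preferring a different zone.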

Consider the unlikely event that the entire asia-northeast1 region becomes unavailable: we've lost one replica of the range and are left with two. The replica in asia-east1-a becomes the leaseholder. Reads are still served without crossing the Pacific, but writes now temporarily require the second remaining replica (us-west1-a) to reach consensus.

Image 3: Data center failure: temporarily under replicated
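The quorum arithmetic behind this failure scenario can be sketched in a few lines of Python. This is a simplified model that assumes a majority quorum over three replicas; the `write_path` helper, its return strings, and the `north-america` continent value are hypothetical names chosen for illustration.

```python
from typing import Dict, List, Set

Replica = Dict[str, str]


def quorum_size(replication_factor: int) -> int:
    """A majority of the replicas must acknowledge a write."""
    return replication_factor // 2 + 1


def write_path(replicas: List[Replica], down_regions: Set[str], home_continent: str) -> str:
    """Classify how a write reaches quorum when some regions are unavailable."""
    live = [r for r in replicas if r["region"] not in down_regions]
    need = quorum_size(len(replicas))
    if len(live) < need:
        return "unavailable"
    local = [r for r in live if r["continent"] == home_continent]
    return "local" if len(local) >= need else "cross-continent"


replicas = [
    {"continent": "asia", "region": "asia-northeast1"},
    {"continent": "asia", "region": "asia-east1"},
    {"continent": "north-america", "region": "us-west1"},
]
print(write_path(replicas, set(), "asia"))
print(write_path(replicas, {"asia-northeast1"}, "asia"))
```

In the healthy case the two Asian replicas form a quorum on their own. Once asia-northeast1 is down, the only remaining quorum includes the us-west1 replica, which is why writes temporarily cross the Pacific.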

Eventually, CockroachDB will replace the missing replica. This uses network bandwidth and other resources, so it waits five minutes (by default) before doing so. If the outage ends before the five minutes elapse, the original replica is reused. Otherwise, a replacement replica must be created and, as always, it is placed to ensure maximum diversity. In this case, a different availability zone is the best we can do (asia-east1-b instead of the already existing gateway node in asia-east1-a).

Image 4: Data center failure: fully replicated in extended region outage
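The waiting rule described above can be written as a small decision function. This is only a sketch of the behavior as stated in the text; the `recovery_action` name and its return strings are hypothetical.

```python
from datetime import timedelta

DEFAULT_WAIT = timedelta(minutes=5)  # default waiting period mentioned in the text


def recovery_action(outage: timedelta, wait: timedelta = DEFAULT_WAIT) -> str:
    """Describe what happens to a replica whose node was unavailable for `outage`."""
    if outage < wait:
        # The node came back in time, so its replica is still usable.
        return "reuse original replica"
    # The wait expired, so a new replica is created in the most diverse location.
    return "create replacement replica"


print(recovery_action(timedelta(minutes=2)))
print(recovery_action(timedelta(minutes=30)))
```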

One region and multiple cloud provider deployments

You may wonder, what happens if my geographic area has only one region?

At the time of writing, Amazon and Google each offer a single Australian region (though as we’ve seen, many other continents have more than one). Australian users can run CockroachDB across different cloud providers because it is not only cloud-agnostic but also supports multi-cloud deployments. You can assign some nodes to the Amazon Australia region and some to the Google Australia region. You could also stay within one cloud provider but expand to a nearby region that's not in Australia, such as the previously mentioned Asia-Pacific region.

Final Thoughts

Ultimately, the distance inherent in global deployments means developers must always make a tradeoff between availability and latency. In typical applications, read queries are far more common than writes. This may allow some applications to keep reads local to Asia-Pacific (with only one replica located in Asia-Pacific) while accepting one cross-Pacific hop for writes (because the majority of replicas are not in Asia-Pacific).

No matter the unique details of your application, CockroachDB offers you the tools to make the best tradeoff for your application.

You can try geo-partitioning before it’s available in GA by downloading the latest 2.0 beta and signing up for a free 30-day enterprise trial.

Illustration by Lea Heinrich