Before he joined DoorDash as principal engineer, Alessandro Salvatori thought databases were boring. Then, he found out about the surprise parties.

“Shortly after I joined,” he quipped, “I realized that DoorDash had a tradition of throwing a ‘surprise party’ at a random time leading to our weekly [order] peak on Fridays. Typically, our main database would crash and burn.”

These crashes led to hours of downtime, Salvatori said in his talk at CockroachDB’s RoachFest conference this year. “Every engineer at the company would stop what they were doing to try and help get our systems back.”

“That’s when I realized that databases could be very interesting,” he said.

Aurora Postgres in DoorDash’s original architecture

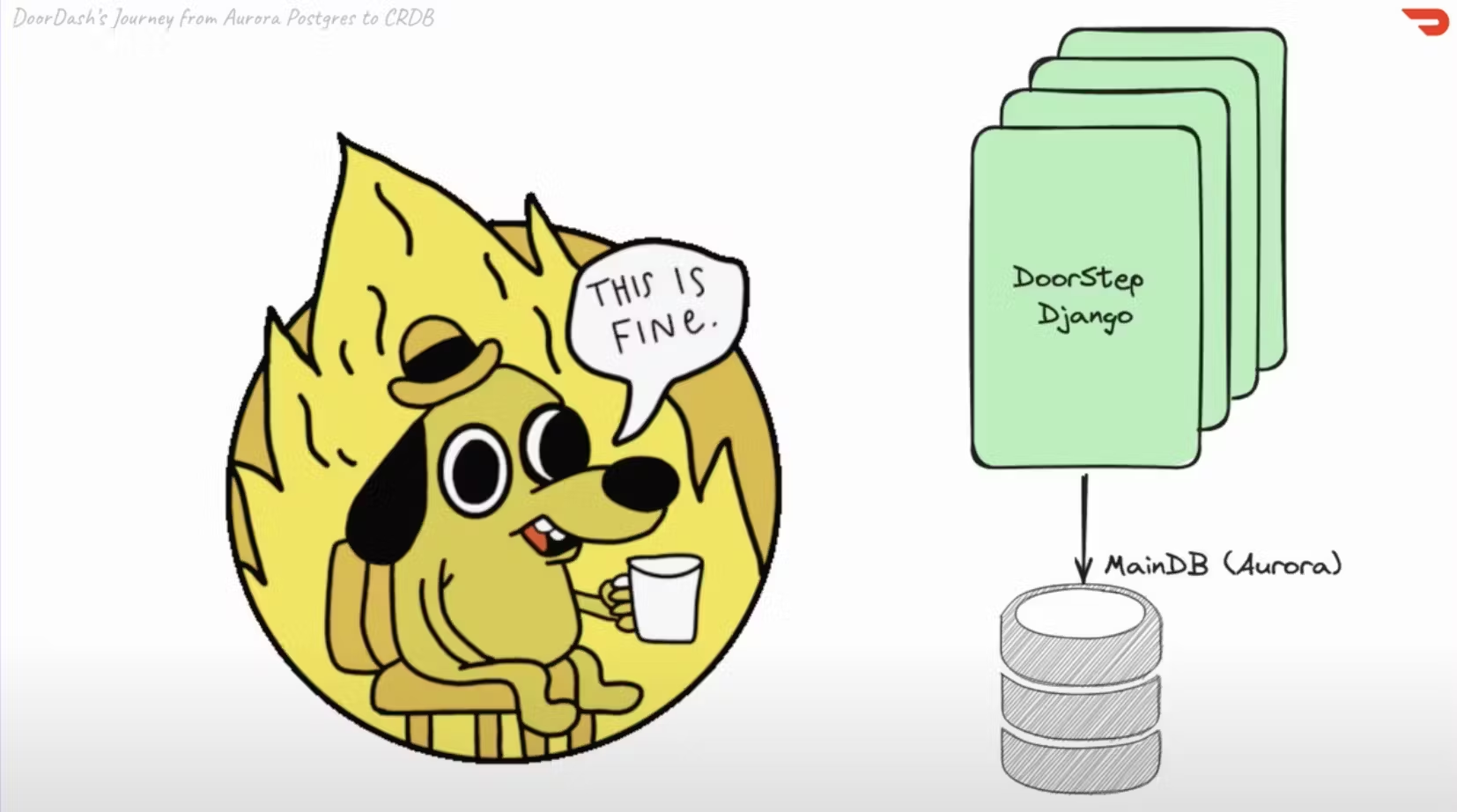

The root cause of these Friday ‘surprise parties’ was DoorDash’s initial architecture. According to Salvatori, the original DoorDash was basically a monolithic Python application linked to a single Aurora Postgres cluster.

The monolithic architecture was one problem, and one that DoorDash was already fixing by the time Salvatori joined the company in 2018. But the move toward a microservices architecture did nothing to mitigate the application’s reliance on a single Aurora Postgres cluster.

The problem, at its core, is that while an Aurora Postgres cluster may have many nodes to handle reads, only a single node – AWS calls it the primary instance – can serve writes.

Having a single write node creates a bottleneck for write-heavy workloads, or any workload with significant volume. Even before the pandemic, DoorDash was growing so quickly that vertically scaling this primary node wasn’t enough to keep up (and that wouldn’t have been a long-term solution anyway).

One solution was to create additional Aurora Postgres clusters, and extract specific tables from the main database to these spin-off clusters to reduce the load. For example, identity management was moved out of the monolith and into its own service on the application side, and a separate identity database cluster was created to serve that service, reducing the load on the main database. But, Salvatori says, this wasn’t enough. They were still playing “whack-a-mole” with database issues, and struggling to keep up with their rapidly growing user base.

The moment of truth: a pandemic success disaster

It all came to a head, Salvatori says, on April 17, 2020. In those early days of the pandemic, much of the United States was locked down. With millions of hungry restaurant-goers now confined to their homes, the popularity of DoorDash had skyrocketed, and its Aurora Postgres clusters simply could not keep up.

April 17 was a Friday – always DoorDash’s peak day – and users poured onto the site to order food. At the peak, the main database cluster was being hit with more than 1.6 million queries per second. It simply couldn’t handle that. “It crashed and burned,” Salvatori says, “and we went down for hours.”

It was what is sometimes called a success disaster – DoorDash was doing so well that its own growth knocked its database, and by extension its entire application, offline.

The losses from this outage, in terms of both revenue and reputation, were substantial. It was clear that something had to change. It was also clear that any system with a single write node probably wasn’t ideal for the kind of scale DoorDash needed.

In its approach to relational databases, the company needed a new “north star.” It found one, Salvatori says, in CockroachDB.

Migrating to CockroachDB

CockroachDB is attractive to companies at DoorDash’s scale for lots of reasons. But for DoorDash, one thing in particular stood in stark contrast to their experience with Aurora Postgres: in CockroachDB all nodes can serve writes simultaneously.

In other words, CockroachDB could both eliminate the write bottleneck imposed by Aurora Postgres’s single-primary-instance limitation and facilitate easier horizontal scaling, since additional write capacity can be added simply by adding nodes.

Of course, DoorDash’s move to CockroachDB was measured, and it took time. At first, the company focused on building a tool to reliably extract tables from its main database cluster to separate Aurora clusters, ensuring easy and lossless reversion in the event something went wrong. After some initial trial and error, they got the tool working and quickly extracted dozens of tables in the span of just a month, giving themselves some breathing room by massively reducing the write load on the primary Aurora cluster.

Ultimately, though, the goal was to move to the “north star” of CockroachDB, and the tool was updated to facilitate an automated migration process that could move tables from Aurora Postgres to CockroachDB losslessly, and with all of the specific requirements that DoorDash had for such a sensitive operation (which was, of course, happening as demand for their service continued to skyrocket).

How did they do it? Listen to Alessandro Salvatori explain it himself in his RoachFest ‘23 talk.