CASE STUDY

Telecom provider replaces Amazon Aurora with CockroachDB

For an always-on customer experience, a major U.S. telecom provider switched to CockroachDB

Millions of customers

24/7/365 uptime

Multi-region

Overview

A top U.S. telecom provider built a virtual customer support agent to act as a first line of triage for customer requests. Initially, the team built the app on Amazon Aurora, but after an AWS connectivity issue took their entire service offline, they decided they needed a more resilient solution. They turned to CockroachDB for its resiliency, strong consistency, and low-latency reads. The telecom provider implemented CockroachDB in a hybrid, multi-region deployment that can survive datacenter failures, and they used the Enterprise geo-partitioned leaseholders feature to achieve high read performance. CockroachDB is now the mission-critical database backing their always-on customer support platform.

Challenge

A large telecom provider wanted to reduce its customer service costs by building an always-available virtual agent, which would provide 24/7 help to millions of customers across the United States. The product relied on keeping metadata about customer conversations in a session database. The telecom team built the first version of the application on Amazon Aurora, in a single cloud region on the east coast of the United States. However, this deployment wasn’t resilient to failures, and when the app lost connectivity to its AWS region, the entire service went offline. The team realized that Aurora’s single-master architecture wasn’t sufficient to attain the always-on customer experience they wanted. They decided to explore other options in hopes of migrating the app.

Requirements

The team had three major requirements for a replacement database. First, they wanted a resilient solution that could survive datacenter or regional failures with no data loss. In the event of a regional failure, they needed their session database to continue serving both reads and writes. This requirement ruled out active-passive deployments.

They also required strong consistency. The team wanted to ensure that a customer session record was never lost if a machine or datacenter failed; no matter which machine or datacenter handled a request, the session always needed to be up to date. From a customer experience perspective, a lost session meant starting a new chat, which would be extremely frustrating. This requirement eliminated NoSQL solutions, which are typically “eventually consistent.”

Finally, read performance was important to the team. As their customer base spans the entire country, they wanted to make sure they could achieve local, low-latency reads anywhere in the United States.

Solution: A Hybrid, Multi-Region CockroachDB Deployment

After evaluating NoSQL and SQL solutions, the telecom team decided to move forward with CockroachDB because it met all their requirements.

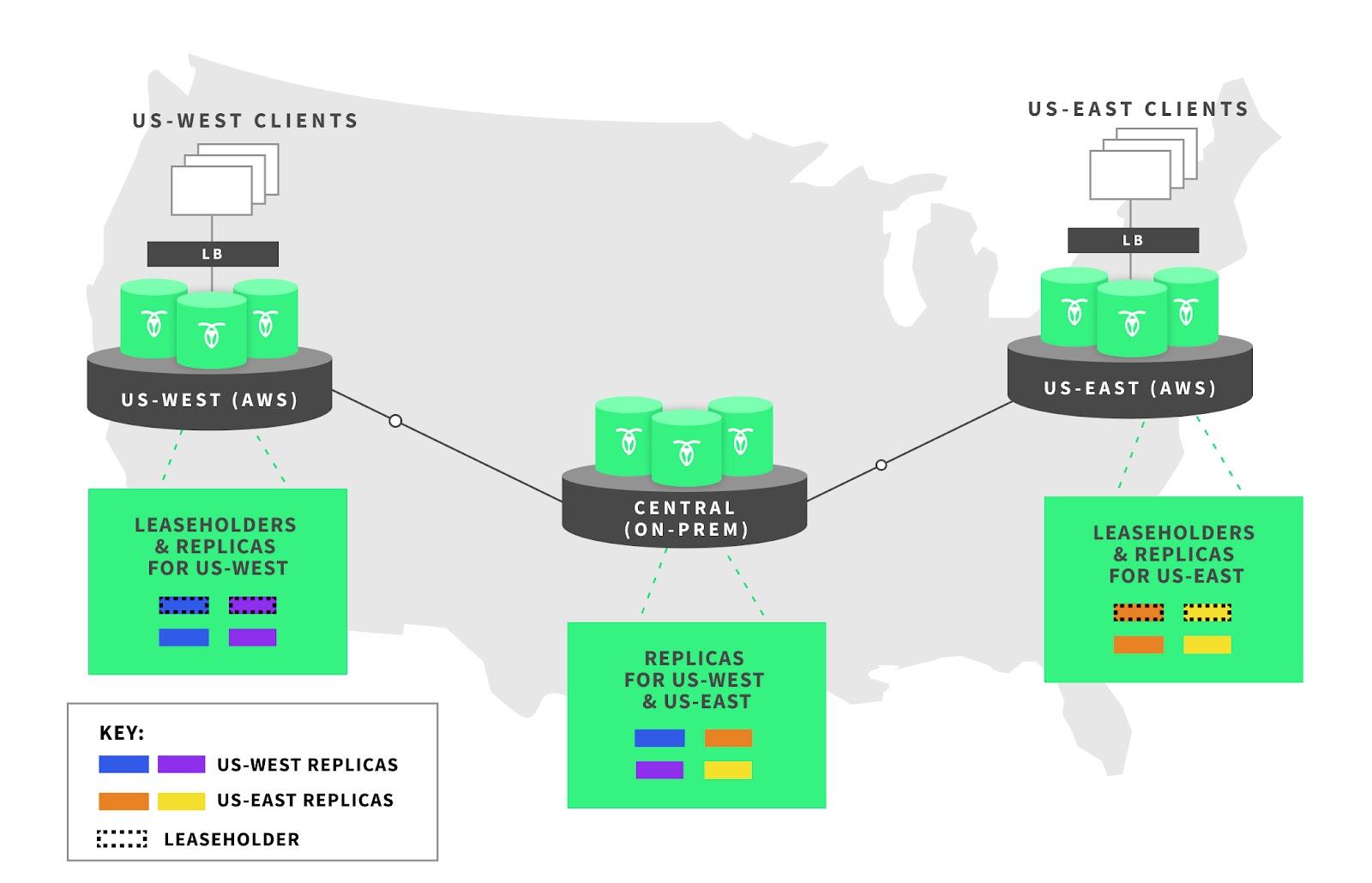

CockroachDB was built to be resilient. It replicates data three times by default and stores the replicas on machines that maximize geographic diversity. To allow data to survive an entire region’s failure, the telecom company added two regions to their existing infrastructure, bringing the total number of regions to three. With one replica in each of the three regions, any single region can fail and the remaining two replicas still form a majority. While they could have added two additional cloud datacenters, the team decided to save money and use their own on-prem datacenter for one of the new regions instead. This created a hybrid CockroachDB deployment. In their setup, load balancers route client requests to the cloud datacenters on either end of the U.S., depending on where the customer is located, while the central on-prem datacenter is used only to achieve quorum among CockroachDB nodes. This architecture has allowed the telecom company to solve their resiliency issues.
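The quorum reasoning behind the three-region layout can be sketched in a few lines of Python. This is an illustrative model only, not CockroachDB code: the region names and the `survives` helper are hypothetical, and the sketch assumes one replica per region as described above.

```python
from __future__ import annotations


def quorum_size(replicas: int) -> int:
    """Smallest majority of a replica group (2 of 3, 3 of 5, ...)."""
    return replicas // 2 + 1


def survives(replicas_per_region: dict[str, int], failed_regions: set[str]) -> bool:
    """Return True if the surviving replicas can still form a majority."""
    total = sum(replicas_per_region.values())
    alive = sum(
        count
        for region, count in replicas_per_region.items()
        if region not in failed_regions
    )
    return alive >= quorum_size(total)


# Hypothetical region names: two cloud regions plus the central on-prem datacenter.
placement = {"east-cloud": 1, "central-onprem": 1, "west-cloud": 1}

# Losing any one region leaves 2 of 3 replicas, which is still a majority.
one_region_down = survives(placement, {"east-cloud"})
# Losing two regions leaves 1 of 3 replicas, so quorum is lost.
two_regions_down = survives(placement, {"east-cloud", "west-cloud"})
# The original single-region layout cannot survive the loss of that region.
single_region = survives({"east-cloud": 3}, {"east-cloud"})
```

Under these assumptions, the model shows why a third region matters: with only two regions, one of them would have to hold two of the three replicas, and losing that region would take the majority with it.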

CockroachDB guarantees serializable isolation by default, so no additional work was required to meet the app’s strong consistency requirements. If a machine, datacenter, or region goes down, CockroachDB ensures that customer sessions are not lost and remain available even when a request must be routed to another datacenter.

With their survivability and consistency goals met, the telecom team wanted to make sure they could maintain local performance, even with their sessions database spread around the U.S. The team was willing to pay a slightly higher latency cost for writes to ensure the database could be resilient to regional failures; however, they wanted to configure CockroachDB so reads could be served locally. To achieve this goal, the team took advantage of geo-partitioned leaseholders, a feature in CockroachDB Enterprise.

The telecom provider’s cluster uses CockroachDB’s geo-partitioned leaseholder topology. For any group of three replicas, the leaseholder is pinned to the region nearest the clients, while the other two replicas are placed in separate regions. This configuration ensures both local reads and survivability.

Using geo-partitioned leaseholders, the team assigned locations to leaseholders in a way that maximizes read performance. By default, only one of any three replicas in CockroachDB is allowed to handle reads and writes for its corresponding segment of data. This replica is called the leaseholder, and it coordinates all reads and writes for that data in order to maintain consistency. Different rules apply to reads versus writes. Reads can return from the leaseholder directly without the need for communication with the other replicas. However, for a write to be acknowledged, the leaseholder must communicate with the other replicas, and a majority of the replicas (a quorum) must agree on the write. This consensus requirement can result in high latency for writes when replicas are far apart.

The geo-partitioned leaseholders feature allows developers to create rules that pin leaseholders to specific locations. In this case, the telecom company keeps leaseholders for customer session records in the cloud datacenter closest to where each customer request originated, on either the east coast or the west coast. This strategy gives their customers optimal read performance for the duration of their support session. Meanwhile, both east coast and west coast writes need to travel to the central on-prem datacenter to achieve quorum (CockroachDB commits as soon as the closest replica acknowledges a write to the leaseholder, rather than waiting for all replicas to acknowledge the write). Writes therefore incur a cross-region round trip on the order of tens of milliseconds, while reads are served locally. In this way, CockroachDB met the telecom team’s third requirement: local reads without sacrificing consistency or resiliency.
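As a rough illustration of the read and write paths described above, the following Python sketch models commit latency as the time for the leaseholder to hear back from enough followers to form a majority. It is a simplified model, not CockroachDB's implementation: the function name and the round-trip times are hypothetical placeholders, not measurements from the telecom provider's deployment.

```python
from __future__ import annotations


def write_commit_latency_ms(follower_rtts_ms: list[float]) -> float:
    """Estimate commit latency for a write coordinated by the leaseholder.

    The leaseholder counts as one vote, so it needs (quorum - 1) follower
    acknowledgements. Followers are contacted in parallel, which means the
    commit completes when the (quorum - 1)-th fastest follower replies.
    """
    replicas = len(follower_rtts_ms) + 1
    acks_needed = replicas // 2  # quorum - 1, where quorum = replicas // 2 + 1
    if acks_needed == 0:
        return 0.0
    return sorted(follower_rtts_ms)[acks_needed - 1]


# Hypothetical round-trip times (ms) from each coastal leaseholder
# to the other two regions: [central on-prem, opposite coast].
east_write_ms = write_commit_latency_ms([30.0, 70.0])
west_write_ms = write_commit_latency_ms([35.0, 70.0])
```

With three replicas, only one follower acknowledgement is needed, so in this model the write latency from either coast is set by the round trip to the central datacenter rather than by the longer coast-to-coast trip. Reads skip this step entirely because the leaseholder answers them without contacting the other replicas.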

Results and What's Next

With their hybrid, multi-region deployment, the telecom team has achieved the resilience, consistency, and read performance they needed, while incurring only a slight increase in write latency. CockroachDB is now the mission-critical database backing their customer support platform, and it can scale out by adding nodes as the user base grows.

The telecom team has already identified other workloads that would benefit from CockroachDB’s architecture and is looking to standardize more of their application backends on the database.