CockroachDB: Distributed SQL Database

The most highly evolved database on the planet. Born in the Cloud. Architected to Scale and Survive.

What workloads are right for CockroachDB?

CockroachDB is in production across thousands of modern cloud applications and services

General Purpose Database

Trust CockroachDB as the primary data store for even your most mundane apps and services

System of Record

Store and process mission-critical data with limitless scale, guaranteed uptime and correctness

Transactional Database

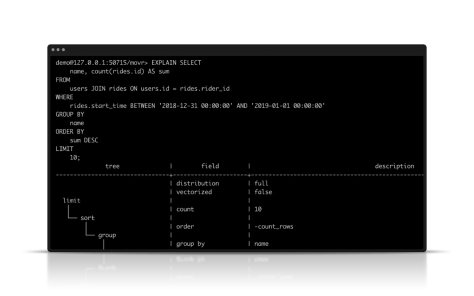

Manage transactional data and perform basic analytics in the database

Capabilities

Build, scale and manage modern, data-intensive applications

CockroachDB delivers Distributed SQL, combining the familiarity of relational data with limitless, elastic cloud scale, bulletproof resilience… and more.

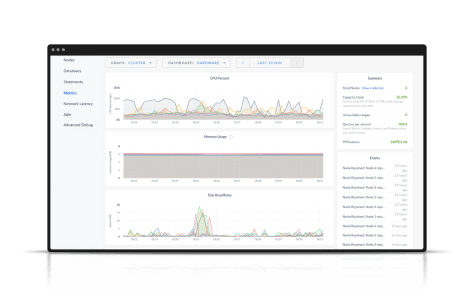

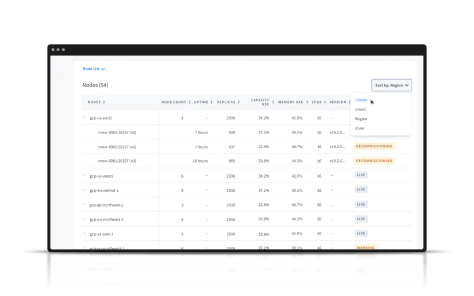

Scale fast

CockroachDB makes scale so simple, you don't have to think about it. It automatically distributes data and workload demand. Break free from manual sharding and complex workarounds.

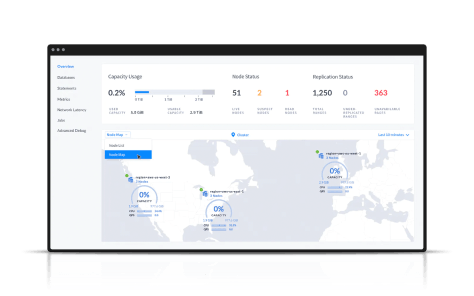

Survive any failure

Downtime isn’t an option, and data loss destroys companies. CockroachDB is architected to handle unpredictability and survive machine, datacenter, and region failures.

Ensure transactional consistency

Correct data is a must for mission-critical applications and even the most mundane ones. CockroachDB provides guaranteed ACID-compliant transactions, so you can trust your data is always right.
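
To make the atomicity part of that guarantee concrete, here is a minimal sketch of an all-or-nothing transfer. It uses Python's built-in `sqlite3` module purely as a stand-in so it runs without a server; it is not CockroachDB-specific code, and the `accounts` table and the `transfer` and `balances` helpers are hypothetical names chosen for illustration. Against CockroachDB you would typically connect through a PostgreSQL-compatible driver instead.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move `amount` from account `src` to `dst` as one atomic unit."""
    # `with conn` commits if the block succeeds and rolls back if it raises.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
        )
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
        )
        (balance,) = conn.execute(
            "SELECT balance FROM accounts WHERE id = ?", (src,)
        ).fetchone()
        if balance < 0:
            # Raising here undoes both UPDATEs above.
            raise ValueError("insufficient funds")


def balances(conn):
    """Return {account_id: balance} for every account."""
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


# Hypothetical sample data: two accounts in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

transfer(conn, 1, 2, 30)       # succeeds: both updates are committed together
try:
    transfer(conn, 1, 2, 500)  # fails: neither update is kept
except ValueError:
    pass
print(balances(conn))          # {1: 70, 2: 80}
```

The failed transfer leaves both balances exactly as they were after the successful one, which is the behavior an ACID-compliant database promises.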

Tie data to location

Where your data lives is critical in distributed systems. CockroachDB lets you pin each row of data to a specific location so you can reduce transaction latencies and comply with data privacy regulations.
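
As a rough illustration of what tying data to a location can look like in practice, the sketch below builds the multi-region SQL statements you might send to a cluster. The helper `multi_region_ddl` and the database, table, and region names are hypothetical; the sketch also assumes the cluster's nodes were started with localities matching those region names. It only produces the statement text and does not connect to anything.

```python
def quote_ident(name):
    """Double-quote an identifier, escaping any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def multi_region_ddl(database, table, primary_region, other_regions):
    """Build statements that make `table` row-level regional in `database`."""
    db = quote_ident(database)
    # The primary region must be set before other regions are added.
    statements = [f"ALTER DATABASE {db} SET PRIMARY REGION {quote_ident(primary_region)};"]
    for region in other_regions:
        statements.append(f"ALTER DATABASE {db} ADD REGION {quote_ident(region)};")
    # With this locality, each row is homed in the region recorded for that row.
    statements.append(f"ALTER TABLE {quote_ident(table)} SET LOCALITY REGIONAL BY ROW;")
    return statements


# Hypothetical names, for illustration only.
for stmt in multi_region_ddl("app", "users", "us-east1", ["europe-west1"]):
    print(stmt)
```

You would run the resulting statements through any SQL client connected to the cluster; region names have to match what the cluster actually reports as available.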
